Query delay

The query delay (or query customization) feature works as an offset for your queries. Instead of requesting data for the last minute, Nobl9 can ask your data source for an earlier time interval, determined by the query delay, to ensure SLI data consistency.

Take the following example:

- Suppose it is 15:00 UTC. Without a custom query delay, the Nobl9 agent would ask the data source for data from 14:59 to 15:00.
- If you set the query delay to 10 minutes, the Nobl9 agent will instead ask the data source for data from 14:49 to 14:50.
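The arithmetic in this example can be sketched in Python. This is a minimal illustration, not the Nobl9 agent's code: it assumes the agent requests a one-minute interval that ends `query_delay` before the current time, and `query_window` and the calendar date are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple


def query_window(now: datetime, query_delay: timedelta,
                 interval: timedelta = timedelta(minutes=1)) -> Tuple[datetime, datetime]:
    """Return the (start, end) of the interval requested at `now`.

    Assumption: the requested interval ends `query_delay` before `now`
    and is `interval` long (one minute by default).
    """
    end = now - query_delay
    return end - interval, end


# Hypothetical date; only the 15:00 UTC time comes from the example above.
now = datetime(2024, 1, 1, 15, 0, tzinfo=timezone.utc)
for delay in (timedelta(0), timedelta(minutes=10)):
    start, end = query_window(now, delay)
    minutes = int(delay.total_seconds() // 60)
    print(f"{minutes} min delay: {start:%H:%M}-{end:%H:%M}")
# prints "0 min delay: 14:59-15:00"
# prints "10 min delay: 14:49-14:50"
```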

Setting a longer query delay can be helpful when your data source may not have a complete data set during the standard Nobl9 agent workflow. Setting a query delay can help with all kinds of SLI problems, for example:

-

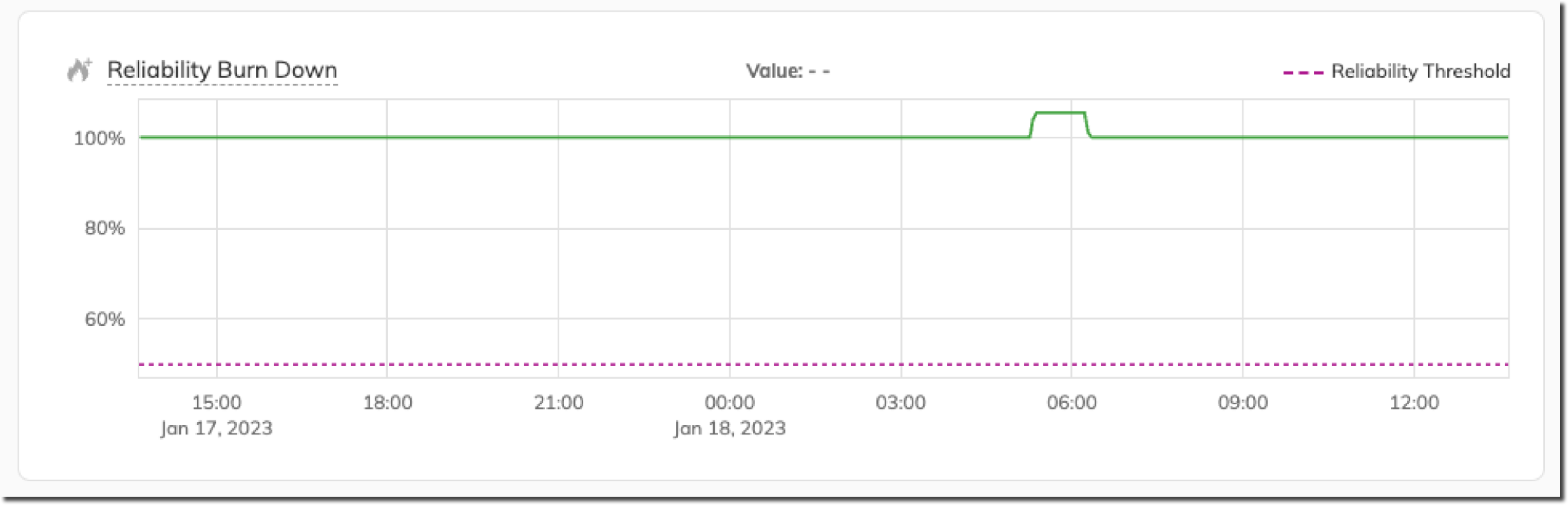

Reliability Burn Down chart indicating over 100%.

This might happen in cases when the Nobl9 agent hasn't collected some of the data points due to a delay greater than one minute, and your ratio metric is collecting more good data points than the total:

Image 1: Reliability Burndown Chart showing reliability >100% -

Differences in raw data between Nobl9 and a data source.

Sometimes the data in the observability platform may not be available. Specifying a longer Query delay allows you to pull data from a time further than one minute in the past.

You can also configure query delay through the Agent's environment variables.

Configuring query delay

Configuring query delay in the UI

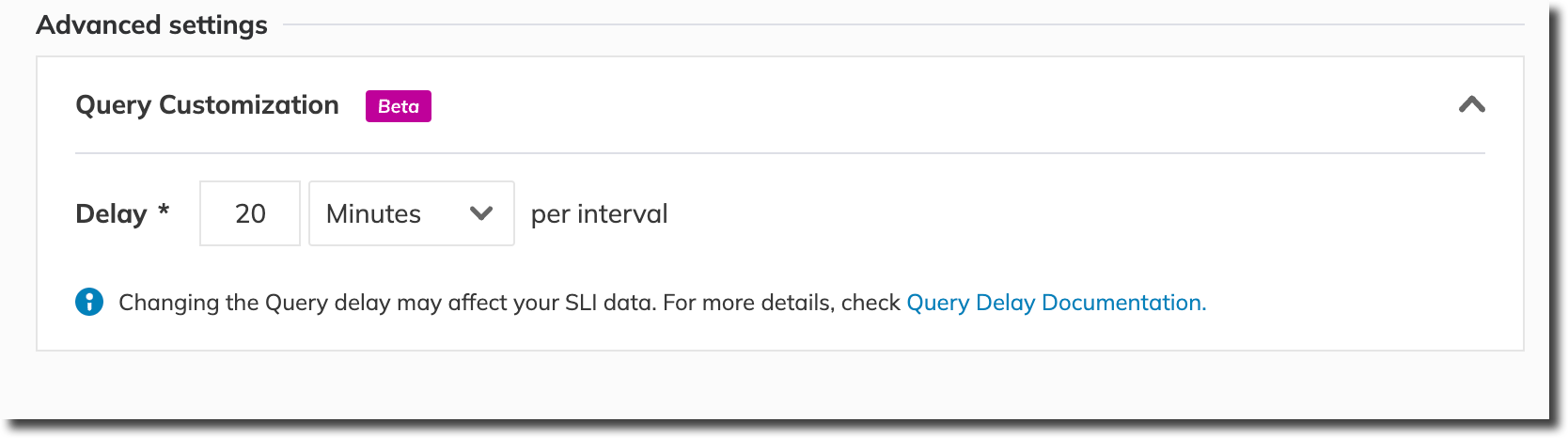

You can configure query delay in the Source Configuration Wizard in Integrations > Sources:

Enter the required value for the query delay and click the Save Data Source button to finish your configuration.

Configuring query delay via sloctl

You can configure query delay via sloctl by defining the spec[n].queryDelay.unit and spec[n].queryDelay.value fields in your YAML.

Refer to the documentation for your data source for more details. The following YAML samples configure query delay for Datadog's Agent and Direct connections:


Here's a YAML snippet with a defined queryDelay for the Datadog Agent:

```yaml
apiVersion: n9/v1alpha
kind: Agent
metadata:
  name: datadog-agent
  project: default
spec:
  datadog:
    site: com
  sourceOf:
    - Metrics
  releaseChannel: beta # string, one of: beta || stable
  queryDelay:
    unit: Minute # string, one of: Second || Minute
    value: 720 # numeric, must be a number less than 1440 minutes (24 hours)
status:
  agentType: Datadog
```

Here's a YAML snippet with a defined queryDelay for Datadog Direct:

```yaml
apiVersion: n9/v1alpha
kind: Direct
metadata:
  name: datadog-direct
  displayName: Datadog direct
  project: datadog-direct
spec:
  description: direct integration with Datadog
  sourceOf: # One or many values from this list are allowed: Metrics, Services
    - Metrics
    - Services
  releaseChannel: beta # string, one of: beta || stable
  queryDelay:
    unit: Minute # string, one of: Second || Minute
    value: 720 # numeric, must be a number less than 1440 minutes (24 hours)
  datadog:
    site: com
    apiKey: "" # secret
    applicationKey: "" # secret
```

If you set a value greater than 24 hours (only the Minute and Second units are allowed), sloctl returns the following error:

`Error: agent.yaml: Key: 'Agent.spec.QueryDelayDuration' Error:Field validation for 'QueryDelayDuration' failed on the 'queryDelayDurationBiggerThanMaximumAllowed' tag`

We recommend keeping query delay values well below this maximum. For most data sources, query delay should be set to at most 15 minutes, although the right value depends on your use case.
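The following Python sketch shows how a `queryDelay` mapping like the ones in the YAML samples translates into a duration and how the 24-hour limit applies. It is not sloctl's validation code: `parse_query_delay` is hypothetical, it treats exactly 1440 minutes as allowed (following the stated maximum, although the YAML comment says "less than 1440"), and rejecting negative values is an added assumption.

```python
from datetime import timedelta

# Units allowed in queryDelay.unit, per the YAML comments above.
UNITS = {"Second": timedelta(seconds=1), "Minute": timedelta(minutes=1)}
MAX_QUERY_DELAY = timedelta(minutes=1440)  # 24 hours
RECOMMENDED_MAX = timedelta(minutes=15)    # guidance for most data sources


def parse_query_delay(spec: dict) -> timedelta:
    """Convert a queryDelay mapping, e.g. {"unit": "Minute", "value": 720},
    into a timedelta and enforce the documented 24-hour maximum."""
    unit, value = spec["unit"], spec["value"]
    if unit not in UNITS:
        raise ValueError(f"unsupported unit {unit!r}; expected Second or Minute")
    if value < 0:
        # Assumption: this sketch treats negative delays as invalid.
        raise ValueError("query delay must not be negative")
    delay = value * UNITS[unit]
    if delay > MAX_QUERY_DELAY:
        raise ValueError(f"query delay {delay} exceeds the 24-hour maximum")
    return delay


delay = parse_query_delay({"unit": "Minute", "value": 720})
print(delay)  # 12:00:00
if delay > RECOMMENDED_MAX:
    print("note: longer than the 15 minutes recommended for most data sources")
```

The 720-minute value from the samples is accepted, but it is far above the recommended 15 minutes, which is why the sketch flags it separately instead of rejecting it.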

Default values for query delay

The following table lists default values for query delay for all available data sources (for Agent and Direct connection methods). m stands for minute(s), s stands for second(s).

| Source | Default query delay |
|---|---|
| Amazon Redshift | 30s |
| Amazon CloudWatch | 1m |
| AppDynamics | 1m |
| Azure Monitor beta | 5m |
| Azure Monitor managed service for Prometheus beta | 0m |
| BigQuery | 0s |
| Datadog | 1m |
| Dynatrace | 2m |
| Elasticsearch | 1m |
| Google Cloud Monitoring | 2m |
| GrafanaLoki | 1m |
| Graphite | 1m |
| InfluxDB | 1m |
| Instana | 1m |
| LogicMonitor beta | 2m |
| NewRelic | 1m |
| OpenTSDB | 1m |
| Pingdom | 1m |
| Prometheus | 0s |
| ServiceNow Cloud Observability | 2m |
| Splunk | 5m |
| Splunk Observability | 5m |
| Sumo Logic | 4m |
| ThousandEyes | 1m |

- Maximum query delay is 1440 minutes or 24 hours.

- Maximum query delay for Splunk Observability is 15 minutes.
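To show how the defaults and maximums fit together, here is a hedged Python sketch. It assumes the default from the table applies whenever no custom query delay is configured; `effective_query_delay` is hypothetical, and the dictionary is only an excerpt of the table.

```python
from datetime import timedelta
from typing import Optional

# Excerpt of the defaults table above (not exhaustive).
DEFAULT_QUERY_DELAY = {
    "Amazon Redshift": timedelta(seconds=30),
    "Datadog": timedelta(minutes=1),
    "Dynatrace": timedelta(minutes=2),
    "Splunk": timedelta(minutes=5),
    "Splunk Observability": timedelta(minutes=5),
    "Sumo Logic": timedelta(minutes=4),
}
# Maximums listed below the table; other sources use the global maximum.
GLOBAL_MAX = timedelta(hours=24)
SOURCE_MAX = {"Splunk Observability": timedelta(minutes=15)}


def effective_query_delay(source: str, custom: Optional[timedelta] = None) -> timedelta:
    """Return the custom delay if configured, otherwise the source default,
    after checking it against the applicable maximum."""
    delay = DEFAULT_QUERY_DELAY[source] if custom is None else custom
    limit = SOURCE_MAX.get(source, GLOBAL_MAX)
    if delay > limit:
        raise ValueError(f"{source}: query delay {delay} exceeds maximum {limit}")
    return delay


print(effective_query_delay("Datadog"))                         # 0:01:00
print(effective_query_delay("Datadog", timedelta(minutes=10)))  # 0:10:00
try:
    effective_query_delay("Splunk Observability", timedelta(minutes=20))
except ValueError as err:
    print(err)
```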

Impact of query delay on the SLI data

Editing query delay

Editing query delay might lead to inconsistencies and periods of incorrect results in Nobl9.

Reducing the query delay from a higher value to a lower value may result in data gaps. For instance, if the query delay is currently set to 10 minutes and you reduce it to 2 minutes, Nobl9 will miss 8 minutes of data, because the new query window starts 8 minutes after the previous one ended.

Increasing the query delay, on the other hand, may lead to duplicated points, because the Nobl9 agent queries a period it has already queried. Ideally, the data source would return points with exactly the same timestamps as before, but the Nobl9 agent cannot guarantee this. The Nobl9 agent may drop such duplicated points, since that period was already queried.
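The effect of a query delay change can be reduced to simple arithmetic. The Python sketch below is a simplification, not agent behavior: it assumes the agent queries back-to-back windows and that the change takes effect between two consecutive queries. `delay_change_effect` is a hypothetical helper.

```python
from typing import Tuple


def delay_change_effect(old_delay_min: float, new_delay_min: float) -> Tuple[str, float]:
    """Describe how changing the query delay shifts the queried time range.

    Lowering the delay moves the query window forward in time, skipping the
    minutes between the old and new windows (a gap). Raising it moves the
    window back over minutes that were already queried (an overlap that can
    produce duplicated points).
    """
    shift = old_delay_min - new_delay_min
    if shift > 0:
        return "gap", shift
    if shift < 0:
        return "overlap", -shift
    return "none", 0.0


print(delay_change_effect(10, 2))  # ('gap', 8)
print(delay_change_effect(2, 10))  # ('overlap', 8)
```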

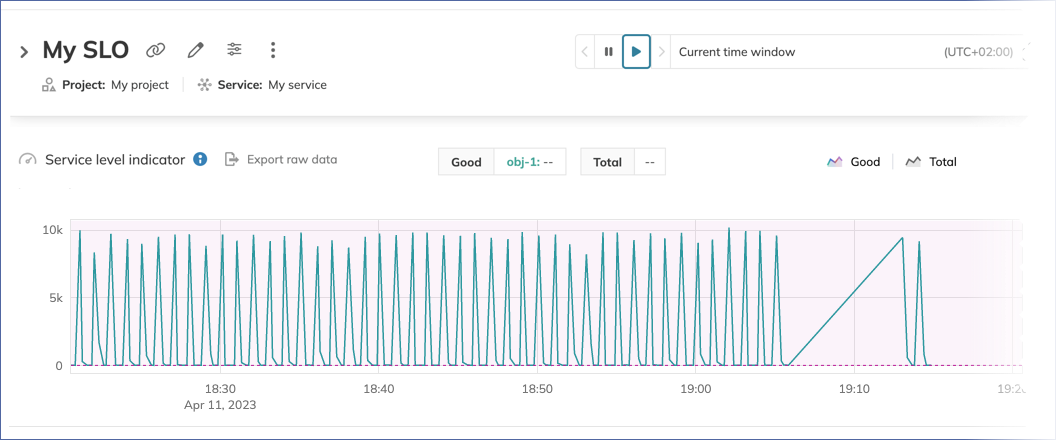

The following SLI Graph illustrates data gaps caused by shortening the query delay from 4 to 15 minutes:

To ensure the health of your SLOs, do not change query delay too often.

As a best practice, determine the correct query delay value on test data first, and then use it to set a custom query delay for each Agent or Direct production instance.

Changes in query delay will affect all your SLOs attached to the data source where you've customized it.

Impact on alerting

When you set query delay to a high value, your SLO alerting capabilities will also be delayed.

Since the query delay acts as a data offset, setting an extended query delay affects Nobl9's alerting capabilities. After you set a query delay, the Nobl9 agent retrieves data with that delay, so live processing and alerting are based on delayed data.

For example, if you set the query delay to 10 minutes, Nobl9 generates alerts based on data that is at least 10 minutes old. It cannot alert on the most recent 10 minutes of data, even though that data reflects the present state of your data source.

Impact on Replay

High custom query delay values (in this context, over 15 minutes) might lead to inconsistencies and short periods of incorrect results for Replay-based SLOs. For narrow Replay time windows (depending on the data source, for example, less than 3 hours), Replay could finish importing historical data before the query delay set in the Agent has elapsed, and a small portion of the data already gathered by Replay could then be overwritten.

Query delay best practices

Since query delay is set at the Agent or Direct level, changing its value applies it to all SLOs on that data source. If only some SLOs need a custom query delay (for example, SLIs with long transaction times), we recommend creating a new data source with the customized query delay to support only those SLOs. We don't recommend changing the configuration of your existing data source, because doing so alters all existing SLOs.

You can quickly move an SLO to a new data source using sloctl. To achieve this, copy the YAML configuration from SLO Details > View YAML in the UI, modify the metricSource[n].name attribute in the YAML file, and then apply the new YAML using the sloctl apply command.

For more details on copying and moving SLOs, check the SLO FAQ and troubleshooting.