Alerting

Effective SLO alerting forms the foundation of reliability engineering, but managing alerts becomes challenging with multiple service level objectives, varying thresholds, and notification channels. Without proper configuration, alert fatigue can undermine monitoring effectiveness.

Nobl9's alert management solution simplifies these complexities by providing intuitive building blocks for reliability alerts. Our platform helps engineers configure precise SLO monitoring parameters, customize notification channels, and implement intelligent alerting logic—all designed to enhance reliability while preventing alert fatigue.

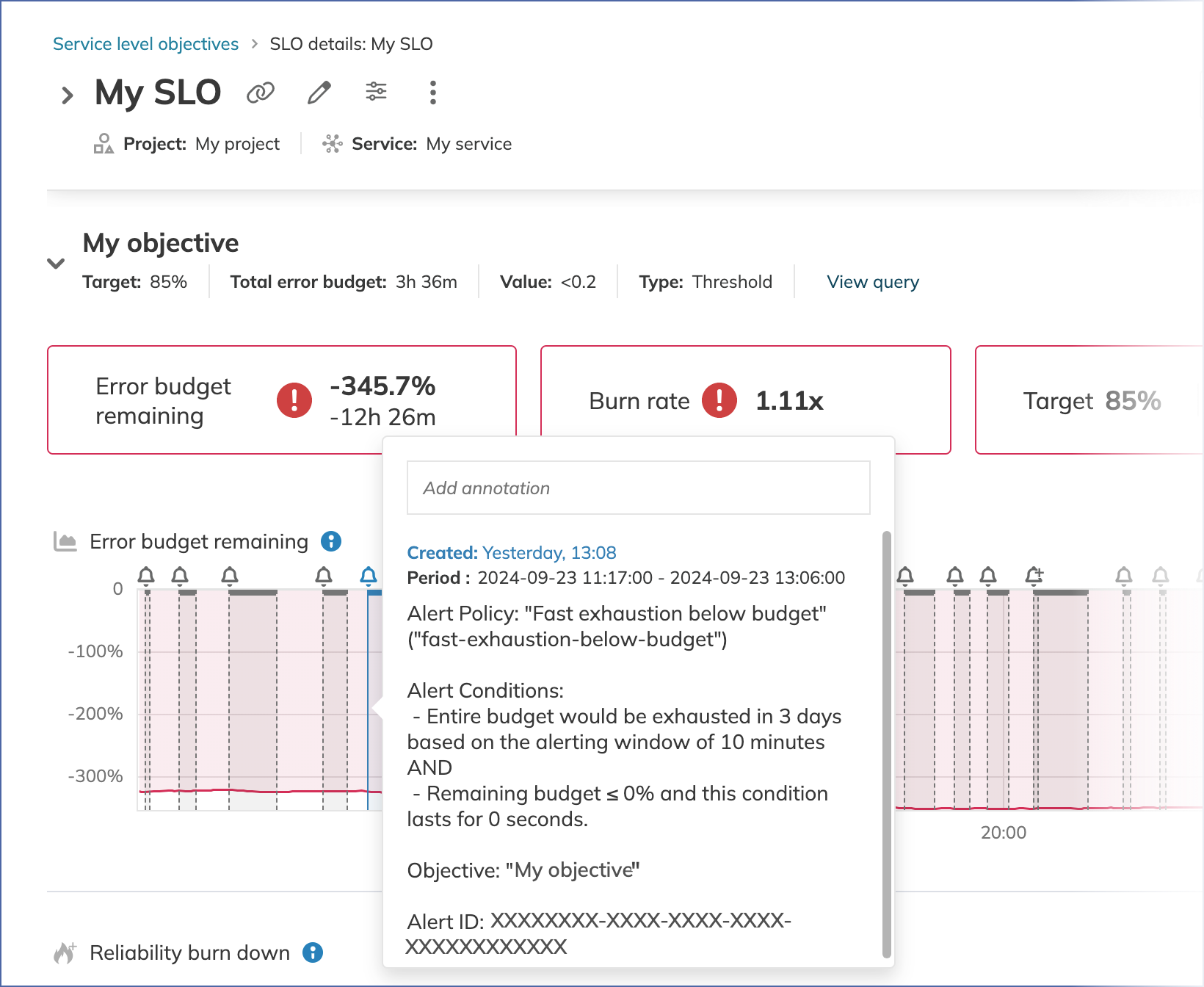

Nobl9 system annotations automatically record alert events on your SLO objective charts. Each time an alert is triggered or resolved, a visual marker appears on the charts. These annotations remain even when alert policies are silenced, providing a complete historical record of your alert activity.

Alert policies

To configure the alerting logic for Nobl9, you can use alert policies that contain the necessary parameters. These parameters include:

-

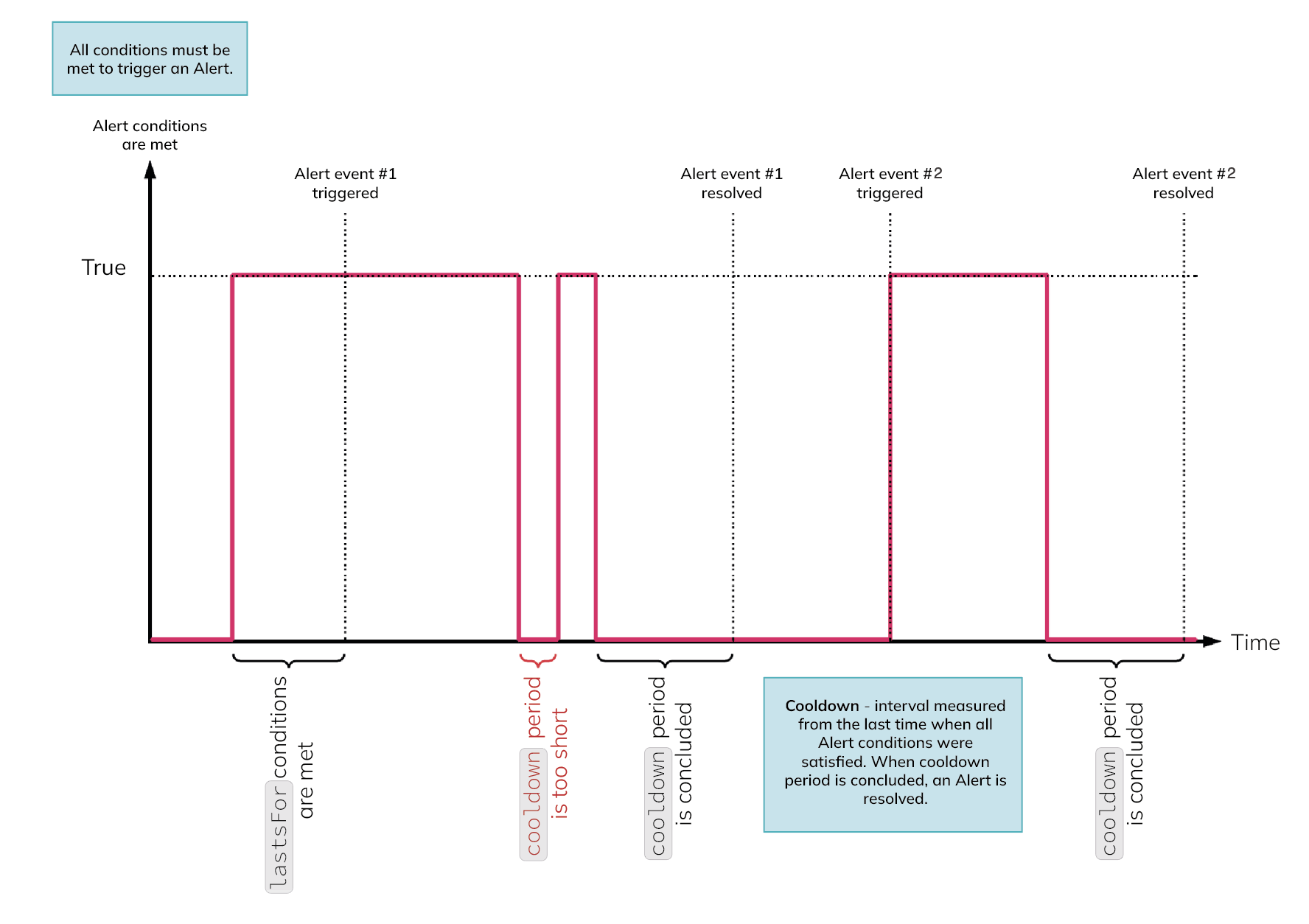

Alert conditions: rules that define when an alert will be triggered. If an Alert Policy has multiple conditions, all of them must be satisfied for Nobl9 alert to trigger an alert. You can think of it as a logical operator

ANDbetween the specific conditions. -

Cooldown period: is a defined amount of time that must pass after the first condition in a policy is no longer met. Cooldown helps to prevent alert fatigue when rules are set to evaluate over smaller time windows and the SLI is particularly volatile. Once the cooldown period has successfully passed, the current alert is considered resolved and a new one can be triggered.

tipYou can use the

AlertSilencefeature to mitigate ongoing alert fatigue. Read more in Silencing alerts. -

Alert methods: notification channels that will be used to send the alert. Nobl9 will send such notification to all alert methods configured in the Alert Policy.

tipYou can create alert methods independently of the alert policy (in the Alerts tab in Nobl9 UI, via

sloctlor Nobl9 Terraform Provider), and you can reuse them across multiple alert policies.You can link alert methods of your choice to alert policy at the moment of its creation or link them later. Read more about Alert methods.

-

Additional settings, such as severity, labels, and metadata (name, description, and project)
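The AND semantics of alert conditions can be illustrated with a minimal Python sketch. The `Condition` class, the `policy_fires` function, and the measurement names (`burn_rate`, `budget_remaining`) are hypothetical and chosen only for illustration; they are not part of Nobl9's schema or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical model: a condition is a named predicate over a dict of
# measurements. These names are illustrative, not Nobl9's schema.
@dataclass
class Condition:
    name: str
    is_met: Callable[[Dict[str, float]], bool]


def policy_fires(conditions: List[Condition], measurements: Dict[str, float]) -> bool:
    """Return True only when every condition is satisfied (logical AND)."""
    return all(c.is_met(measurements) for c in conditions)


conditions = [
    Condition("burn rate >= 4", lambda m: m["burn_rate"] >= 4.0),
    Condition("budget remaining < 20%", lambda m: m["budget_remaining"] < 0.20),
]

# Both conditions hold, so the policy would trigger an alert.
print(policy_fires(conditions, {"burn_rate": 5.0, "budget_remaining": 0.10}))
# Only the first condition holds, so no alert is triggered.
print(policy_fires(conditions, {"burn_rate": 5.0, "budget_remaining": 0.50}))
```

In this sketch, a single unmet condition is enough to keep the policy from firing, which mirrors the "all conditions must be satisfied" rule described above.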

You can create alert policies in the Alerts view or using sloctl.

Follow the YAML guide to see how to set it up through YAML.

Lifecycle of an alert

Each alert has a lifecycle tied to the configured alert policy and the objective against which it is evaluated. Each stage of the lifecycle corresponds to a specific status.

Alert statuses

There are three possible statuses for an alert:

- Triggered

- Resolved

- Canceled

An alert policy triggers alerts independently of other policies for each objective of any SLO it is linked to. A single alert policy can generate multiple alerts for an SLO, but only one can be in a Triggered state for each objective in this SLO. This means no new alerts from a specific alert policy can be triggered while there is a Triggered alert for this objective.
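The "one Triggered alert per policy and objective" rule can be sketched as a small bookkeeping class. This is only an illustration under assumed names (`AlertTracker`, `try_trigger`, `close`, and the policy and SLO names); it does not describe how Nobl9 implements the rule internally.

```python
from typing import Dict, Optional, Tuple

# Key: (alert policy, SLO, objective). All names are illustrative.
Key = Tuple[str, str, str]


class AlertTracker:
    """Tracks which (policy, SLO, objective) keys have a Triggered alert."""

    def __init__(self) -> None:
        self._triggered: Dict[Key, int] = {}  # key -> id of the open alert
        self._next_id = 1

    def try_trigger(self, policy: str, slo: str, objective: str) -> Optional[int]:
        """Open a new alert unless one is already Triggered for this key."""
        key = (policy, slo, objective)
        if key in self._triggered:
            return None  # an alert is already Triggered; no new one is created
        alert_id = self._next_id
        self._next_id += 1
        self._triggered[key] = alert_id
        return alert_id

    def close(self, policy: str, slo: str, objective: str) -> None:
        """Record that the open alert is no longer Triggered."""
        self._triggered.pop((policy, slo, objective), None)


tracker = AlertTracker()
first = tracker.try_trigger("fast-burn", "checkout-latency", "objective-1")
duplicate = tracker.try_trigger("fast-burn", "checkout-latency", "objective-1")
other_objective = tracker.try_trigger("fast-burn", "checkout-latency", "objective-2")
other_policy = tracker.try_trigger("slow-burn", "checkout-latency", "objective-1")
```

Here `duplicate` is `None` because the same policy already has a Triggered alert for that objective, while a different objective or a different policy gets its own alert.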

If an SLO has multiple alert policies, each policy has its own alert lifecycle and can trigger alerts independently.

After an alert policy generates an alert, the alert is in a Triggered state. It remains in this state until the alert conditions are no longer satisfied and the cooldown period has passed. At that point, the alert moves to a Resolved state.

If you change the configuration of an alert policy, or a new calendar window starts for an SLO with a calendar-aligned time window, the alert moves to a Canceled state.
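The status transitions described in this section can be summarized as a small state machine. The sketch below is a simplification under stated assumptions: the `Event` names are hypothetical, and treating Resolved and Canceled as final states for a given alert is an assumption made for illustration.

```python
from enum import Enum


class Status(Enum):
    TRIGGERED = "Triggered"
    RESOLVED = "Resolved"
    CANCELED = "Canceled"


class Event(Enum):
    # Hypothetical event names, used only in this sketch.
    CONDITIONS_CLEARED_AND_COOLDOWN_PASSED = "conditions_cleared_and_cooldown_passed"
    POLICY_CONFIGURATION_CHANGED = "policy_configuration_changed"
    CALENDAR_WINDOW_STARTED = "calendar_window_started"


def next_status(status: Status, event: Event) -> Status:
    """Return the alert status after an event."""
    if status is not Status.TRIGGERED:
        # Assumption: a Resolved or Canceled alert does not change again;
        # any later incident produces a new alert instead.
        return status
    if event is Event.CONDITIONS_CLEARED_AND_COOLDOWN_PASSED:
        return Status.RESOLVED
    # A policy change or a new calendar window cancels the alert.
    return Status.CANCELED


print(next_status(Status.TRIGGERED, Event.CONDITIONS_CLEARED_AND_COOLDOWN_PASSED).value)
print(next_status(Status.TRIGGERED, Event.POLICY_CONFIGURATION_CHANGED).value)
```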

When an alert event assumes the resolved status within the duration of AlertSilence, Nobl9 will send you an all-clear notification.

Read more in Silencing alerts.

Cooldown period

The cooldown period is designed to prevent sending too many alerts. It is an interval measured from the last timestamp at which all alert policy conditions were satisfied.

Each SLO objective keeps its cooldown period separately from other objectives. If multiple alert policies are configured, each cooldown is evaluated independently and does not impact the lifecycle of other alert policies.
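The timing rule can be sketched as follows, assuming a hypothetical helper `cooldown_elapsed` and illustrative objective names. Whether the boundary is inclusive (the cooldown counts as passed exactly at the 5-minute mark) is an assumption of this sketch, not something the description above specifies.

```python
from datetime import datetime, timedelta, timezone


def cooldown_elapsed(last_satisfied: datetime, cooldown: timedelta, now: datetime) -> bool:
    """True once the cooldown has fully elapsed since the conditions were last satisfied."""
    # Assumption: the boundary is inclusive.
    return now - last_satisfied >= cooldown


cooldown = timedelta(minutes=5)

# Each objective tracks its own "last satisfied" timestamp independently.
last_satisfied = {
    "objective-1": datetime(2022, 1, 16, 12, 0, tzinfo=timezone.utc),
    "objective-2": datetime(2022, 1, 16, 12, 4, tzinfo=timezone.utc),
}

now = datetime(2022, 1, 16, 12, 6, tzinfo=timezone.utc)
elapsed = {
    name: cooldown_elapsed(timestamp, cooldown, now)
    for name, timestamp in last_satisfied.items()
}
print(elapsed)
```

At 12:06, six minutes have passed for objective-1, so its cooldown has elapsed, while objective-2 is only two minutes past its last satisfied timestamp and is still cooling down.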

Assumptions for cooldown

In that case, the alert will be resolved 10 minutes after the incident is resolved, even if the cooldown period is set to 5 minutes.

The diagram below shows a simplified lifecycle of an alert policy with a defined cooldown period and the lastsFor parameter set.

As an alternative to lastsFor, you can use alerting_window to be alerted about an incident immediately, based on the specified evaluation window.

Learn more about those parameters in Nobl9 observation model for alerting.

Check out the Alerting - use case in SLOcademy for more complex examples.

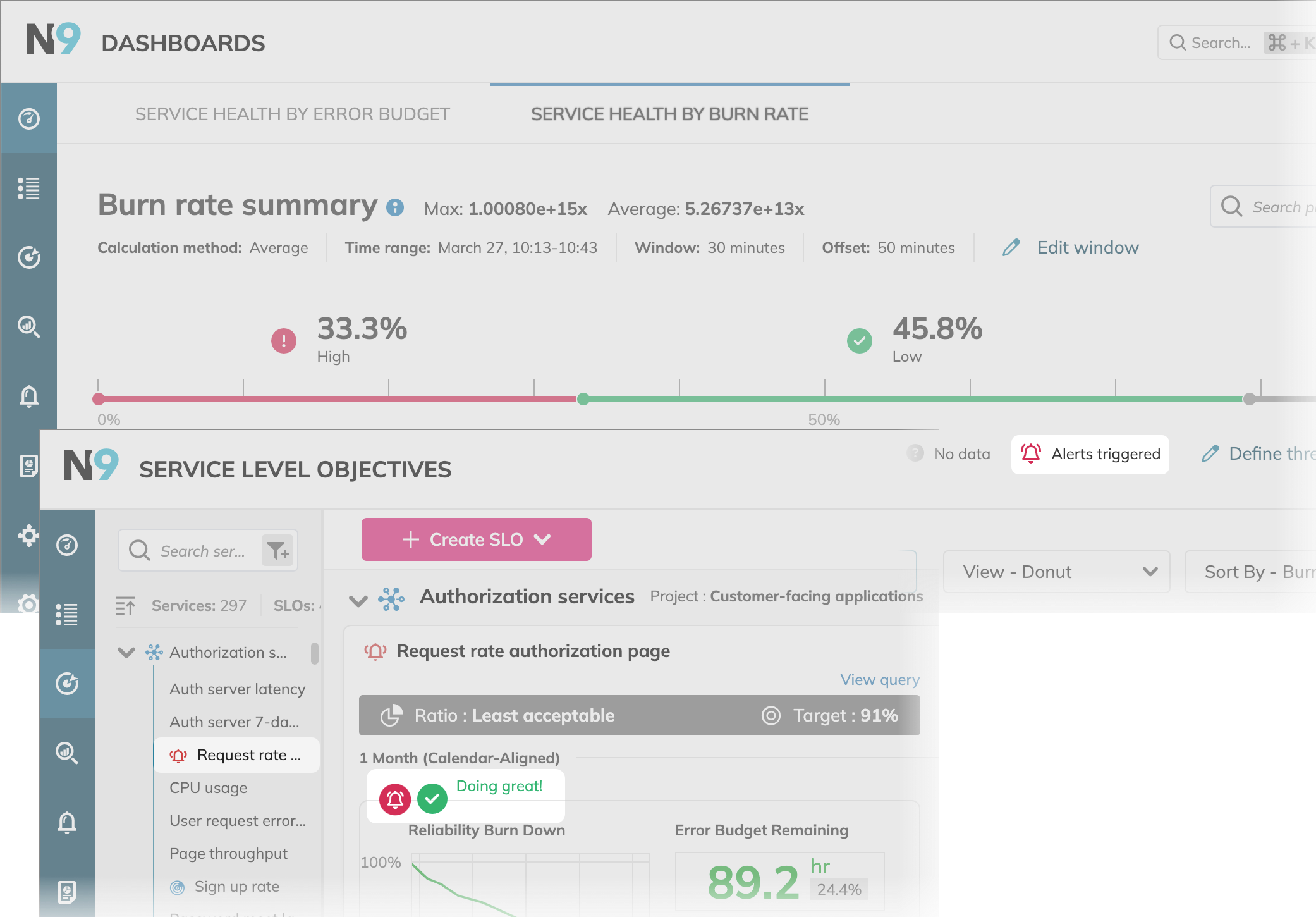

Displaying triggered alerts in the UI

When an SLO triggers alerts, Nobl9 marks this SLO in the following locations:

Retrieving triggered alerts in sloctl

Using sloctl, you can retrieve information about when an alert stopped being valid. To do so, run the following command:

```shell
sloctl get alerts
```

For more details on the sloctl get alerts command, see the sloctl User guide.


Here's an example of a triggered alert that hasn't been resolved yet:

```yaml
apiVersion: n9/v1alpha
kind: Alert
metadata:
  name: 6fbc76bc-ff8a-40a2-8ac6-65d7d7a2686e
  project: alerting-test
spec:
  alertPolicy:
    name: burn-rate-is-4x-immediately
    project: alerting-test
  service:
    name: triggering-alerts-service
    project: alerting-test
  severity: Medium
  slo:
    name: prometheus-rolling-timeslices-threshold
    project: alerting-test
  status: Triggered
  objective:
    displayName: Acceptable
    name: objective-1
    value: 950
  triggeredClockTime: "2022-01-16T00:28:05Z"
```

Here's an example of a resolved alert:

```yaml
apiVersion: n9/v1alpha
kind: Alert
metadata:
  name: 6fbc76bc-ff8a-40a2-8ac6-65d7d7a2686e
  project: alerting-test
spec:
  alertPolicy:
    name: burn-rate-is-4x-immediately
    project: alerting-test
  resolvedClockTime: "2022-01-18T12:59:07Z"
  service:
    name: triggering-alerts-service
    project: alerting-test
  severity: Medium
  slo:
    name: prometheus-rolling-timeslices-ratio
    project: alerting-test
  status: Resolved
  objective:
    displayName: Acceptable
    name: objective-1
    value: 950
  triggeredClockTime: "2022-01-18T12:53:09Z"
```
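Once the alerts are loaded into Python dictionaries, the two timestamps can be used to work out how long an alert stayed open. The sketch below assumes the records have already been parsed from the YAML output and that triggeredClockTime and resolvedClockTime sit under spec; the helper names `parse_clock_time` and `alert_duration_seconds` are hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional


def parse_clock_time(value: str) -> datetime:
    """Parse a UTC timestamp in the format used in the alert examples."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def alert_duration_seconds(alert: dict) -> Optional[float]:
    """Seconds between trigger and resolution, or None if not resolved yet."""
    spec = alert["spec"]
    resolved = spec.get("resolvedClockTime")
    if resolved is None:
        return None  # the alert has no resolution timestamp yet
    triggered = parse_clock_time(spec["triggeredClockTime"])
    return (parse_clock_time(resolved) - triggered).total_seconds()


# Records trimmed to the fields this sketch needs.
resolved_alert = {
    "spec": {
        "status": "Resolved",
        "triggeredClockTime": "2022-01-18T12:53:09Z",
        "resolvedClockTime": "2022-01-18T12:59:07Z",
    }
}
triggered_alert = {
    "spec": {
        "status": "Triggered",
        "triggeredClockTime": "2022-01-16T00:28:05Z",
    }
}

print(alert_duration_seconds(resolved_alert))   # 358.0
print(alert_duration_seconds(triggered_alert))  # None
```

For the resolved example, the alert was open for 358 seconds, that is, 5 minutes and 58 seconds.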

If you describe infrastructure as code, you might also consider defining the alert methods with the same convention. You can find more details in our Terraform documentation.

Adding labels to alert methods

You can add one or more labels to an alert policy. They'll be sent along with the alert notification when the policy’s conditions are met.