Budget drop

The Budget drop condition is an intuitive condition type meant to simplify building actionable alert policies. A drop in the SLO's error budget is a straightforward metric that lets you trigger timely alerts when your SLO is at risk.

The Budget drop condition can be useful when:

- You want to be alerted when the budget drop in an observed period exceeds an acceptable level

- You don't know how to calculate the exact burn rate at which an alert policy should alert you

Use case example

Let's take a look at a recent incident monitored by an SLO, where the error budget dropped by 40%. Determining the precise average burn rate value that should trigger an alert at the right moment can be complex. However, by observing the impact of incidents on the error budget drop, you can better understand and configure alert conditions effectively.

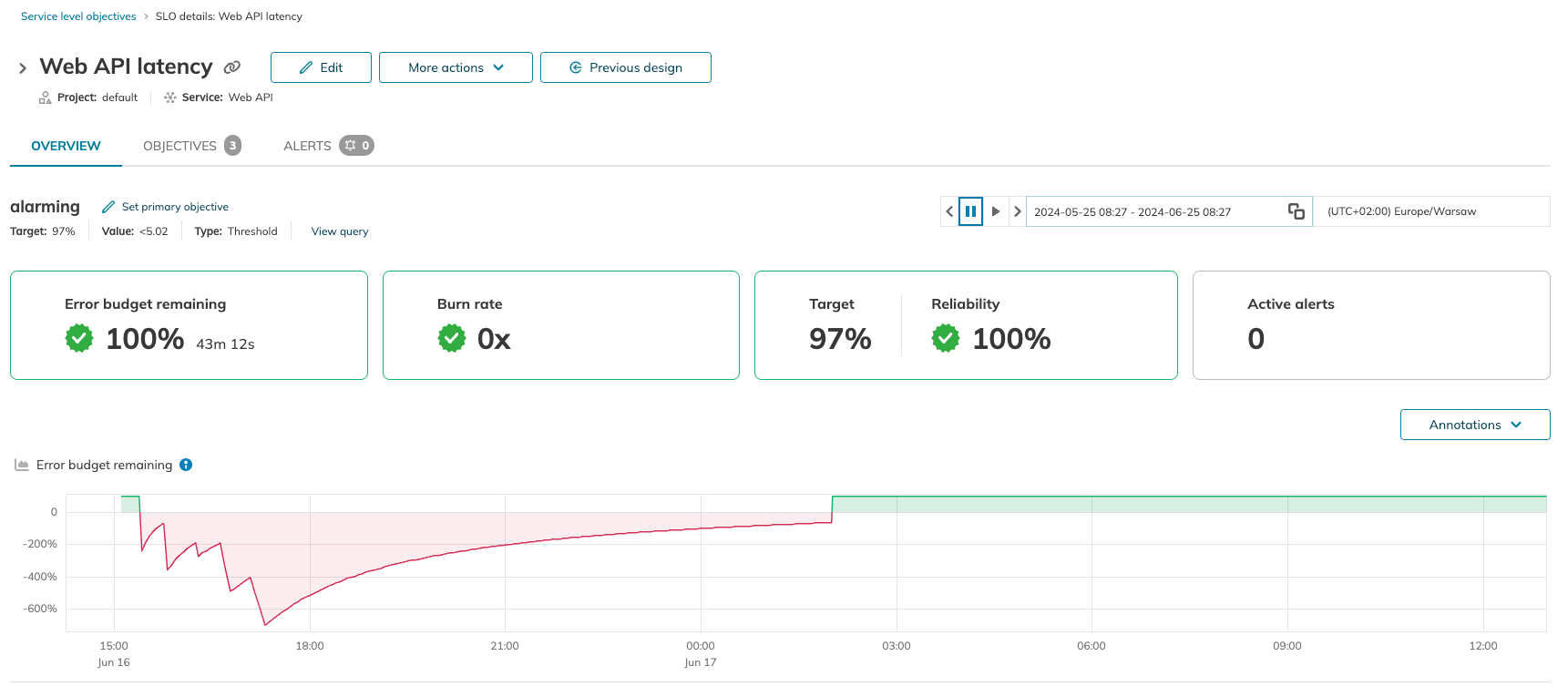

By observing the budget drop, you can readily identify similar incidents on the Error budget remaining chart in the SLO details view.

Now, consider the following scenario: you have a Service Level Objective with a 28-day rolling time window and three objectives:

- Acceptable with a target of 99.9% (error budget = 0.1%)

- Poor with a target of 99.8% (error budget = 0.2%)

- Alarming with a target of 99.7% (error budget = 0.3%)

Suppose a recent incident consumed approximately 0.1% of the Acceptable objective's budget within 2 hours. To monitor this proactively, you need an alert condition, and the easiest way to track this SLO's state is to observe its budget drop. Based on that incident, you can configure an alert to trigger when the error budget drops by 0.08% or more within a 2-hour window, so that you can respond quickly if a similar situation occurs again.

Overview of the condition

The budget drop condition measures a relative drop in the error budget, expressed as a percentage, that you can observe on the Remaining error budget chart:

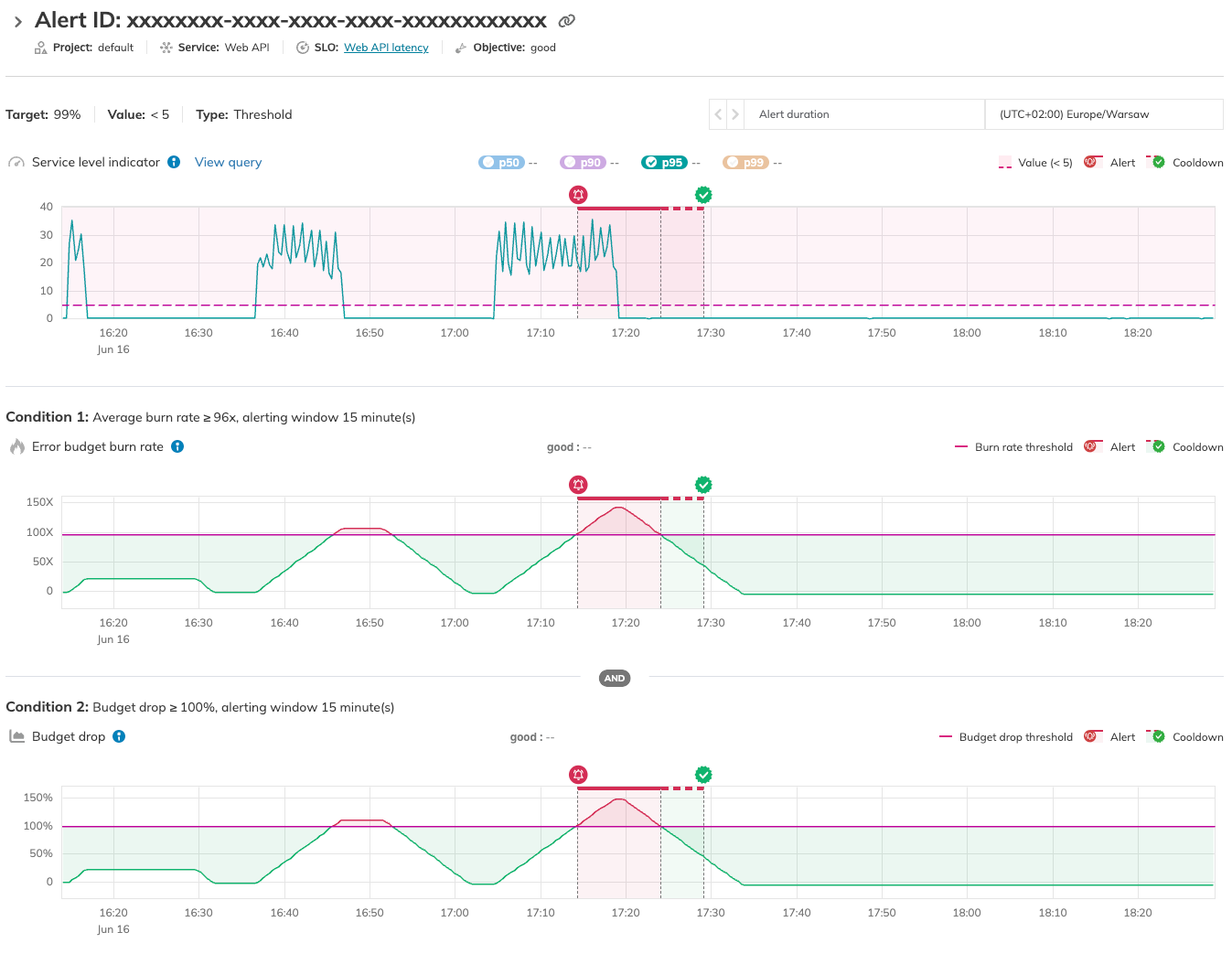

You can investigate the reasons behind alerts triggered by this condition on the Budget drop chart in the alert details view.
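To make the measurement concrete, the following is a minimal sketch of how a budget drop over an alerting window could be evaluated. It is an illustration only, not the product's implementation: the function names `budget_drop` and `should_alert`, the sample data, and the choice to compare the oldest and newest readings inside the window are all assumptions.

```python
from datetime import datetime, timedelta


def budget_drop(samples, window, now):
    """Return how much the remaining budget fell during the last `window`.

    `samples` is a time-ordered list of (timestamp, remaining_budget) pairs,
    with remaining_budget given as a fraction (0.85 means 85% remaining).
    """
    start = now - window
    in_window = [value for ts, value in samples if start <= ts <= now]
    if len(in_window) < 2:
        return 0.0  # not enough data to measure a drop
    # Assumption: drop = oldest reading in the window minus the newest one.
    return in_window[0] - in_window[-1]


def should_alert(samples, window, now, threshold):
    """True when the drop over `window` is at least `threshold` (the `gte` case)."""
    return budget_drop(samples, window, now) >= threshold


# Hypothetical readings: the remaining budget falls from 90% to 78% in one hour.
t0 = datetime(2024, 1, 1, 12, 0)
samples = [
    (t0, 0.90),
    (t0 + timedelta(minutes=30), 0.85),
    (t0 + timedelta(minutes=60), 0.78),
]
now = t0 + timedelta(minutes=60)

print(should_alert(samples, timedelta(hours=1), now, threshold=0.10))     # True
print(should_alert(samples, timedelta(minutes=30), now, threshold=0.10))  # False
```

With a 1-hour window the drop is about 12%, which crosses the 10% threshold. With a 30-minute window only the last 7% is visible, so the same threshold is not met.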

Budget drop and burn rate

You can define the Budget drop condition as a simple alternative to configuring fast and slow burn policies based on the Average burn rate condition:

- Fast budget drop (5% of error budget over the last 1h): budget drop ≥ 5% based on the alerting window of 1h

- Slow budget drop (0.5% of error budget over the last 2h): budget drop ≥ 0.5% based on the alerting window of 2h

The charts in the Alert details view show the correlation between the budget drop and average burn rate conditions.

Check the calculator below to convert budget drop to average burn rate.

YAML definition

The following are YAML samples for configuring slow and fast burn by budget drop:

- Fast burn

apiVersion: n9/v1alpha
kind: AlertPolicy
metadata:
  name: fast-budget-drop
  displayName: Fast budget drop (10% over 15 min)
spec:
  description: The budget dropped by 10% over the last 15 minutes and is not recovering.
  severity: High
  coolDown: "5m"
  conditions:
    - measurement: budgetDrop
      value: 0.1
      alertingWindow: 15m
      op: gte

- Slow burn

apiVersion: n9/v1alpha
kind: AlertPolicy
metadata:
  name: slow-budget-drop
  displayName: Slow budget drop (5% over 1h)
spec:
  description: The budget dropped by 5% over the last 1 hour and is not recovering.
  severity: Low
  coolDown: "5m"
  conditions:
    - measurement: budgetDrop
      value: 0.05
      alertingWindow: 1h
      op: gte
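In the samples above, `value` is a fraction rather than a percentage: `0.1` matches the 10% in the display name, and `0.05` matches 5%. The short sketch below turns a condition into a readable sentence to make that mapping explicit. The helper `describe_condition` is hypothetical, and it only handles the `gte` operator because that is the only one shown here.

```python
# Only `gte` appears in the samples; other operators are deliberately left out.
OPERATORS = {"gte": ">="}


def describe_condition(condition):
    """Render a budgetDrop condition as text, showing `value` as a percentage."""
    percent = condition["value"] * 100  # 0.1 -> 10 (percent)
    symbol = OPERATORS[condition["op"]]
    return (
        f'{condition["measurement"]} {symbol} {percent:g}% '
        f'over {condition["alertingWindow"]}'
    )


fast = {"measurement": "budgetDrop", "value": 0.1, "alertingWindow": "15m", "op": "gte"}
slow = {"measurement": "budgetDrop", "value": 0.05, "alertingWindow": "1h", "op": "gte"}

print(describe_condition(fast))  # budgetDrop >= 10% over 15m
print(describe_condition(slow))  # budgetDrop >= 5% over 1h
```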

Budget drop and average burn rate calculator

Fill in the fields to the left to calculate budget drop and average burn rate.
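The conversion between the two measures can also be approximated by hand. The sketch below assumes the common definition of burn rate, where a burn rate of 1 consumes exactly 100% of the error budget over the full SLO time window, so that average burn rate = budget drop × (SLO time window / alerting window). The function names are hypothetical, and this is not confirmed to be the exact method the calculator uses.

```python
from datetime import timedelta


def budget_drop_to_burn_rate(drop, alerting_window, slo_window):
    """Average burn rate needed to consume `drop` (a fraction) in `alerting_window`."""
    if alerting_window <= timedelta(0) or slo_window <= timedelta(0):
        raise ValueError("windows must be positive durations")
    # Dividing two timedeltas yields a plain float ratio.
    return drop * (slo_window / alerting_window)


def burn_rate_to_budget_drop(burn_rate, alerting_window, slo_window):
    """Fraction of the budget consumed at `burn_rate` during `alerting_window`."""
    if alerting_window <= timedelta(0) or slo_window <= timedelta(0):
        raise ValueError("windows must be positive durations")
    return burn_rate * (alerting_window / slo_window)


# A 28-day rolling time window, as in the scenario described earlier.
SLO_WINDOW = timedelta(days=28)

fast = budget_drop_to_burn_rate(0.05, timedelta(hours=1), SLO_WINDOW)
slow = budget_drop_to_burn_rate(0.005, timedelta(hours=2), SLO_WINDOW)

print(round(fast, 2))  # 33.6
print(round(slow, 2))  # 1.68
```

Under these assumptions, a 5% drop in 1 hour corresponds to an average burn rate of about 33.6, and a 0.5% drop in 2 hours corresponds to about 1.68.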