Data export

Nobl9 Enterprise users can export their SLO and SLI metrics in CSV format to an AWS S3 or Google Cloud Storage bucket.

The data export feature allows Nobl9 Enterprise users to combine their SLO data with business metrics such as revenue, conversions, or other KPIs to quantify the impact of the reliability of their services.

Feature overview

You can export data to:

- Merge Nobl9 data with data from in-house telemetry systems to enable holistic business metrics reporting.
- Conduct audits and post-mortem analyses to investigate when services started to fail, when the error budget was exhausted, and whether anyone responded when an alert was triggered.
- Improve internal reporting by recreating reports, graphs, or historical data.
- Enhance debugging by checking whether your system generates correct data and sends it correctly to Nobl9.

The data export job runs every hour at half past the hour and takes about one minute to complete, so new output can take up to 61 minutes to appear.
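The schedule above reduces to simple clock arithmetic. As a minimal Python sketch, assuming `now` is a UTC datetime, each run starts exactly at minute 30, and a run takes about one minute, the hypothetical helpers below estimate when the next file should appear:

```python
from datetime import datetime, timedelta, timezone

RUN_MINUTE = 30                      # assumption: the job starts at half past each hour (UTC)
RUN_DURATION = timedelta(minutes=1)  # assumption: a run takes about one minute


def next_export_run(now: datetime) -> datetime:
    """Return the start of the next export run strictly after `now` (a UTC datetime)."""
    run = now.replace(minute=RUN_MINUTE, second=0, microsecond=0)
    if run <= now:
        # This hour's run has already started, so the next one is an hour later.
        run += timedelta(hours=1)
    return run


def expected_availability(now: datetime) -> datetime:
    """Return roughly when the next export file should appear in the bucket."""
    return next_export_run(now) + RUN_DURATION


if __name__ == "__main__":
    now = datetime(2022, 3, 10, 16, 31, tzinfo=timezone.utc)
    print(expected_availability(now))  # 2022-03-10 17:31:00+00:00
```

Data that just misses the 16:30 run waits for the 17:30 run plus its run time, which is where the 61-minute upper bound comes from.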

Nobl9 writes time-series data and SLO details to a single CSV file, compressed with gzip. No encryption is applied to the file itself. At the storage level, S3 buckets use Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3).
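To load an exported file, you can decompress and parse it in one pass. The Python sketch below uses only the standard library and assumes the file starts with a header row containing the column names listed under Output schema and is UTF-8 encoded. `read_export` is a hypothetical helper name.

```python
import csv
import gzip
from typing import Dict, Iterator


def read_export(path: str) -> Iterator[Dict[str, str]]:
    """Yield each row of a gzip-compressed export file as a dict keyed by column name."""
    # "rt" decompresses and decodes in one step; newline="" is what the csv module expects.
    with gzip.open(path, mode="rt", encoding="utf-8", newline="") as f:
        yield from csv.DictReader(f)
```

For example, `for row in read_export("export.csv.gz"): print(row["measurement"], row["value"])` prints each measurement and its value (the file name is illustrative). Every field arrives as a string, so convert `value` with `float()` before doing arithmetic.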

Prerequisites

A storage bucket

You must own an S3 bucket or a Google Cloud Storage bucket to use data export. Because the bucket is yours, you have full access to the exported Nobl9 data and control over its retention policies and storage costs.

Trusted IPs

To ensure the security of your network and control who can access it, you may need to add Nobl9 IP addresses to your allowlist so that data leaving Nobl9 can reach its destination without being blocked.

IP addresses to include in your allowlist for secure access

app.nobl9.com instance:

- 18.159.114.21
- 18.158.132.186
- 3.64.154.26

us1.nobl9.com instance:

- 34.121.54.120
- 34.123.193.191
- 34.134.71.10
- 35.192.105.150
- 35.225.248.37
- 35.226.78.175
- 104.198.44.161
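If your firewall or proxy expects CIDR notation, each address above is a single-host /32 block. This Python sketch builds those blocks and checks whether a given source address belongs to a Nobl9 instance. The helper names are hypothetical; the addresses are copied from the lists above.

```python
import ipaddress
from typing import List

# Nobl9 egress addresses, copied from the allowlist above.
NOBL9_EGRESS_IPS = {
    "app.nobl9.com": ["18.159.114.21", "18.158.132.186", "3.64.154.26"],
    "us1.nobl9.com": [
        "34.121.54.120", "34.123.193.191", "34.134.71.10", "35.192.105.150",
        "35.225.248.37", "35.226.78.175", "104.198.44.161",
    ],
}


def allowlist_cidrs(instance: str) -> List[str]:
    """Return the instance's addresses as /32 CIDR blocks."""
    return [str(ipaddress.ip_network(f"{ip}/32")) for ip in NOBL9_EGRESS_IPS[instance]]


def is_nobl9_source(instance: str, source_ip: str) -> bool:
    """Check whether a source address belongs to the given Nobl9 instance."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in allowlist_cidrs(instance))
```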

Configuration

AWS S3

To connect Nobl9 to your S3 bucket, you must configure an IAM role that Nobl9 can assume to obtain permission to write data to the bucket.

To do that:

- Run the following sloctl command:

```shell
sloctl aws-iam-ids dataexport
```

This command returns the external ID that Nobl9 uses to assume the IAM role when executing the data export. As of sloctl version 0.0.93, the older `sloctl get dataexport --aws-external-id` command is deprecated.

- Download the AWS Terraform module (a private repository, available upon request).

- Enter the Terraform variables. For example, create an `input.auto.tfvars` file in the root module with the following content:

```hcl
aws_region                    = "<AWS_REGION_WHERE_TO_DEPLOY_RESOURCES>" # Region where Terraform provisions the S3 bucket
external_id_provided_by_nobl9 = "<EXTERNAL_ID_FOR_ORGANIZATION>"         # External ID returned by `sloctl aws-iam-ids dataexport`
s3_bucket_name                = "<S3_BUCKET_FOR_N9_DATA_NAME>"           # Desired bucket name; if omitted, a random bucket name is generated

# Optionally, add tags to every resource Terraform creates
tags = {
  "key" = "value"
}

# Other available variables

# Name of the IAM role that gives Nobl9 access to the created bucket
# When omitted, the default name "nobl9-exporter" is used
iam_role_to_assume_by_nobl9_name = "<NAME_OF_CREATED_ROLE_FOR_N9>"

# Whether to delete all objects from the created S3 bucket on `terraform destroy` (true or false),
# which allows destroying a non-empty bucket without errors
# When omitted, the default value false is used
s3_bucket_force_destroy = <S3_BUCKET_FOR_N9_FORCE_DESTROY>
```

- Create a YAML file that defines `kind: DataExport`, named, for example, `nobl9-export.yaml`:

```yaml
- apiVersion: n9/v1alpha
  kind: DataExport
  metadata:
    name: "kube permissible name" # replace with a Kubernetes-permissible name
    displayName: S3 data export
    project: default
  spec:
    exportType: S3
    spec:
      bucketName: "bucket name"
      # ARN of the IAM role that Nobl9 assumes to write to the bucket
      roleArn: arn:aws:iam::<AWS_ACCOUNT_ID>:role/<NAME_OF_CREATED_ROLE_FOR_N9>
```

- Apply the YAML file with the `sloctl apply` command:

```shell
sloctl apply -f nobl9-export.yaml
```
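Before running `sloctl apply`, it can help to catch leftover placeholders such as `<AWS_ACCOUNT_ID>`. The following Python sketch is a hypothetical pre-flight check, not part of sloctl. It assumes the manifest's `spec` section has already been loaded into a dict (for example, with a YAML library) and that the role lives in the standard `aws` partition.

```python
import re
from typing import Dict, List

# Assumption: IAM role ARN in the standard "aws" partition:
# arn:aws:iam::<12-digit account ID>:role/<optional path/><role name>
ROLE_ARN_RE = re.compile(r"^arn:aws:iam::\d{12}:role/[\w+=,.@/-]+$")


def check_s3_export_spec(spec: Dict) -> List[str]:
    """Return problems found in the `spec` section of an S3 DataExport manifest (empty if none)."""
    problems = []
    if spec.get("exportType") != "S3":
        problems.append("exportType must be S3")
    inner = spec.get("spec") or {}
    if not inner.get("bucketName"):
        problems.append("spec.bucketName is empty")
    # An unfilled placeholder such as <AWS_ACCOUNT_ID> fails this match.
    if not ROLE_ARN_RE.match(inner.get("roleArn") or ""):
        problems.append("spec.roleArn is not an IAM role ARN")
    return problems
```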

GCP

To connect Nobl9 to a Google Cloud Storage bucket:

- Set up your Google Cloud Storage bucket using the following Terraform code:

```hcl
resource "google_storage_bucket" "reports" {
  name          = "bucket name"
  location      = "location"
  storage_class = "storage class"
}

# Grant the Nobl9 data export service account access to the bucket
resource "google_storage_bucket_iam_binding" "binding" {
  bucket = google_storage_bucket.reports.name
  role   = "roles/storage.objectAdmin"
  members = [
    "serviceAccount:n9-data-export-service-account@nobl9-prod-app.iam.gserviceaccount.com",
  ]
}
```

To grant Nobl9 access to the bucket, you can use the predefined `roles/storage.objectAdmin` role, as in the snippet above.

Alternatively, create a custom role with the following minimum required permissions:

- `storage.objects.create`
- `storage.objects.get`

- The bucket must allow access to the Nobl9 service account `n9-data-export-service-account@nobl9-prod-app.iam.gserviceaccount.com`. The snippet above grants this in the `members` attribute of the `google_storage_bucket_iam_binding` resource.

- Then, create a YAML file that defines `kind: DataExport`, named, for example, `nobl9-export.yaml`:

```yaml
- apiVersion: n9/v1alpha
  kind: DataExport
  metadata:
    name: "kube permissible name" # replace with a Kubernetes-permissible name
    displayName: GCP data export
    project: default
  spec:
    exportType: GCS
    spec:
      bucketName: "bucket name"
```

You must also add a file named n9_organizations to the bucket, containing your organization's unique identifier.

- Apply the YAML file with the `sloctl apply` command:

```shell
sloctl apply -f nobl9-export.yaml
```

To view the existing configuration of your data exports, run the `sloctl get dataexports` command.

Limitations

A single organization can configure up to two data exports, one per export type: one that exports data to AWS S3 and one that exports data to Google Cloud Storage.
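If you keep DataExport manifests in version control, you can check the one-export-per-type limit before applying them. The following is a minimal Python sketch. It assumes the manifests are already parsed into dicts, and `check_export_limits` is a hypothetical helper. Nobl9 itself remains the authority on which configurations it accepts.

```python
from collections import Counter
from typing import Dict, List

# Export types used in the DataExport manifests in the Configuration section.
SUPPORTED_EXPORT_TYPES = {"S3", "GCS"}


def check_export_limits(manifests: List[Dict]) -> List[str]:
    """Report DataExport manifests that would exceed one export per export type."""
    problems = []
    counts = Counter(m["spec"]["exportType"] for m in manifests)
    for export_type, n in sorted(counts.items()):
        if export_type not in SUPPORTED_EXPORT_TYPES:
            problems.append(f"unsupported exportType: {export_type}")
        elif n > 1:
            problems.append(f"{n} exports of type {export_type}; only one is allowed")
    return problems
```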

Currently, Nobl9 doesn't support exporting files to a specified folder or path. All exported files go to the AWS S3 or Google Cloud Storage bucket defined in your data export configuration.

Currently, Nobl9 doesn't support configuring data export on a per-project basis. Nobl9 always exports all data from an organization, regardless of the project defined in the DataExport metadata.

Once your data is exported, you can filter it by project in your data store (AWS S3 or GCP) using the `project` column.
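Because every file covers the whole organization, you have to build per-project views after export. As a sketch, assuming rows shaped like those produced by `csv.DictReader` over an export file, the hypothetical `split_by_project` helper groups them by the `project` column:

```python
from collections import defaultdict
from typing import Dict, Iterable, List

Row = Dict[str, str]  # one CSV row keyed by column name


def split_by_project(rows: Iterable[Row]) -> Dict[str, List[Row]]:
    """Group exported rows by the value of their `project` column, preserving row order."""
    by_project: Dict[str, List[Row]] = defaultdict(list)
    for row in rows:
        by_project[row["project"]].append(row)
    return dict(by_project)
```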

Output schema

Schema of the exported data

| Column name | Data type | Description | Nullable? |
|---|---|---|---|
| timestamp | DATETIME | Event time in UTC | N |
| organization | STRING | Organization identifier | N |
| project | STRING | Project name | N |
| measurement | STRING | Measurement type. One of the following values: raw_metric, good_total_ratio, instantaneous_burn_rate, good_count_metric, bad_count_metric, total_count_metric, remaining_budget_duration, remaining_budget, ratio_extrapolation_window. For exact usage, see Sample queries. | N |
| value | DOUBLE | Numeric value; its meaning depends on the measurement | N |
| time_window_start | DATETIME | Time window start time in UTC, precalculated for the measurement | N |
| time_window_end | DATETIME | Time window end time in UTC, precalculated for the measurement | N |
| slo_name | STRING | SLO name | N |
| slo_description | STRING | SLO description | Y |
| error_budgeting_method | STRING | Error budget calculation method | N |
| budget_target | DOUBLE | Objective's target value (percentage in decimal form) or composite's target value | N |
| objective_display_name | STRING | Objective's display name | Y |
| objective_value | DOUBLE | Objective value or composite's burn rate condition value | N |
| objective_operator | STRING | The operator used with raw metrics, or the composite's burn rate condition operator. One of the following values: lte (less than or equal), lt (less than), gte (greater than or equal), gt (greater than) | Y |
| service | STRING | Service name | N |
| service_display_name | STRING | Service display name | Y |
| service_description | STRING | Service description | Y |
| slo_time_window_type | STRING | Type of time window. One of the following values: Rolling, Calendar | N |
| slo_time_window_duration_unit | STRING | Time window duration unit. One of the following values: Second, Minute, Hour, Day, Week, Month, Quarter, Year | N |
| slo_time_window_duration_count | INT | Time window duration | N |
| slo_time_window_start_time | TIMESTAMP_TZ | Start time of the time window from the SLO definition, as a DateTime with the time zone defined in the SLO. Only for calendar-aligned time windows. | N |
| composite | BOOLEAN | Indicates whether the row contains composite-related data (true means the row contains composite data). Applies to both legacy composite SLOs and Composites 2.0. | N |
| objective_name | STRING | Objective's name | N |
| slo_labels | STRING | Labels (in key:value format) attached to the SLO, separated by commas without spaces | Y |
| slo_display_name | STRING | SLO display name | Y |
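The `slo_labels` column packs all labels into a single string. The Python sketch below splits it into a mapping from key to values. It assumes a key may appear more than once (hence the list of values) and that label values contain no commas. `parse_slo_labels` is a hypothetical helper.

```python
from typing import Dict, List, Optional


def parse_slo_labels(raw: Optional[str]) -> Dict[str, List[str]]:
    """Parse `slo_labels` ("key:value" pairs joined by commas, no spaces) into key -> values."""
    labels: Dict[str, List[str]] = {}
    if not raw:
        return labels  # the column is nullable; csv yields "" for an empty field
    for pair in raw.split(","):
        key, _, value = pair.partition(":")  # split on the first colon only
        labels.setdefault(key, []).append(value)
    return labels
```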

Sample queries

Service level objective details

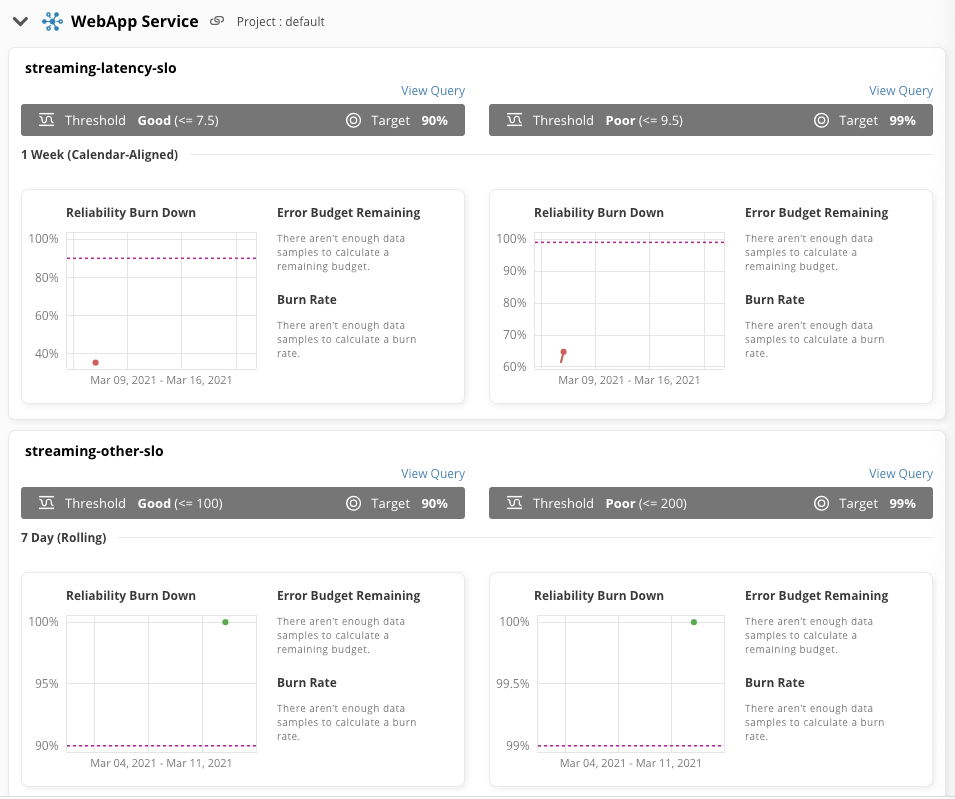

Let's say we have a sample service, WebApp Service, with two SLOs (streaming-latency-slo and streaming-other-slo), each containing two objectives, as shown above.

If the CSV data is imported into a data warehouse (for example, Snowflake), we can retrieve similar information using the following query:

```sql
select distinct
    service_display_name,
    service,
    project,
    slo_name,
    objective_name,
    objective_value,
    budget_target * 100 as target
from nobl9_data
order by service, slo_name
```
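To see why the query uses `select distinct`, note that the export holds one row per timestamp and measurement, so SLO metadata repeats on every row. The following Python sketch runs the same query against an in-memory SQLite table. SQLite stands in for Snowflake here, the table holds only a subset of the export columns, and all rows (including the service name `webapp` and the objective names) are made-up illustrative data.

```python
import sqlite3

# In-memory stand-in for the warehouse table, with a subset of the export columns.
conn = sqlite3.connect(":memory:")
conn.execute(
    """create table nobl9_data (
        timestamp text, service_display_name text, service text, project text,
        slo_name text, objective_name text, objective_value real,
        budget_target real, measurement text, value real)"""
)
conn.executemany(
    "insert into nobl9_data values (?, ?, ?, ?, ?, ?, ?, ?, ?, ?)",
    [
        # Two time-series rows for the same (made-up) objective...
        ("2022-03-10 16:00:00", "WebApp Service", "webapp", "default",
         "streaming-latency-slo", "objective-1", 1.0, 0.95, "raw_metric", 0.8),
        ("2022-03-10 16:01:00", "WebApp Service", "webapp", "default",
         "streaming-latency-slo", "objective-1", 1.0, 0.95, "raw_metric", 0.9),
        # ...and one row for a second objective of the same SLO.
        ("2022-03-10 16:00:00", "WebApp Service", "webapp", "default",
         "streaming-latency-slo", "objective-2", 2.0, 0.99, "raw_metric", 1.7),
    ],
)

SLO_DETAILS_QUERY = """
select distinct
    service_display_name, service, project, slo_name,
    objective_name, objective_value, budget_target * 100 as target
from nobl9_data
order by service, slo_name
"""

# `distinct` collapses the per-timestamp rows into one row per objective.
details = conn.execute(SLO_DETAILS_QUERY).fetchall()
for row in details:
    print(row)
```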

Service level indicator query

Count metric example

```sql
select timestamp, value, measurement
from nobl9_data
where slo_name = 'streaming-latency-slo'
  and measurement in ('good_count_metric', 'bad_count_metric', 'total_count_metric')
  and objective_value = 1
  and timestamp >= '2022-03-10 16:00:00'
  and timestamp <= '2022-03-10 16:30:00'
  and project = 'default'
order by timestamp, measurement
```
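The count metric query returns one row per timestamp and measurement. This Python sketch pivots those rows into one record per timestamp and derives the fraction of good events with plain arithmetic (good / total, or (total - bad) / total). The helper names are hypothetical, and this is not necessarily how Nobl9 computes `good_total_ratio`.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple


def pivot_counts(rows: Iterable[Tuple[str, float, str]]) -> Dict[str, Dict[str, float]]:
    """Turn (timestamp, value, measurement) rows into {timestamp: {measurement: value}}."""
    pivot: Dict[str, Dict[str, float]] = defaultdict(dict)
    for timestamp, value, measurement in rows:
        pivot[timestamp][measurement] = value
    return dict(pivot)


def good_fraction(counts: Dict[str, float]) -> Optional[float]:
    """Fraction of good events at one timestamp, from good/total or bad/total counts."""
    total = counts.get("total_count_metric")
    if not total:
        return None  # no total, or zero events: the fraction is undefined
    if "good_count_metric" in counts:
        return counts["good_count_metric"] / total
    if "bad_count_metric" in counts:
        return (total - counts["bad_count_metric"]) / total
    return None
```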

Raw metric example

```sql
select timestamp, value
from nobl9_data
where slo_name = 'newrelic-server-requests-slo'
  and measurement = 'raw_metric'
  and objective_value = 7.5
  and objective_operator = 'lte'
  and timestamp >= '2022-03-10 16:00:00'
  and timestamp <= '2022-03-10 16:30:00'
  and project = 'default'
order by timestamp
```

Reliability burn down query

```sql
select timestamp, value
from nobl9_data
where measurement = 'good_total_ratio'
  and slo_name = 'streaming-latency-slo'
  and objective_value = 7.5
  and timestamp >= '2022-03-10 15:00:00'
  and timestamp <= '2022-03-10 17:00:00'
  and project = 'default'
order by timestamp
```

Error budget burn rate query

```sql
select timestamp, value, objective_value
from nobl9_data
where measurement = 'burn_rate'
  and slo_name = 'streaming-latency-slo'
  and timestamp >= '2022-03-10 15:00:00'
  and timestamp <= '2022-03-10 17:00:00'
  and project = 'default'
order by timestamp
```

Error budget remaining

Remaining budget duration in seconds

```sql
select timestamp, value
from nobl9_data
where measurement = 'remaining_budget_duration'
  and slo_name = 'streaming-latency-slo'
  and objective_value = 9.5
  and project = 'default'
order by timestamp desc
limit 1
```

Remaining budget percentage (percentage value in decimal form)

```sql
select timestamp, value
from nobl9_data
where measurement = 'remaining_budget'
  and slo_name = 'streaming-latency-slo'
  and objective_value = 9.5
  and project = 'default'
order by timestamp desc
limit 1
```
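Both remaining-budget values usually need converting before display. This is a minimal Python sketch with hypothetical helper names. It treats `remaining_budget_duration` as seconds, per the heading above, and `remaining_budget` as a decimal fraction. The handling of negative durations is an assumption, for the case where the budget is already exhausted.

```python
from datetime import timedelta


def format_remaining_duration(seconds: float) -> str:
    """Render `remaining_budget_duration` (seconds) as a readable duration, e.g. '1 day, 2:03:04'."""
    # Assumption: the value may be negative once the budget is exhausted; keep the sign readable.
    sign = "-" if seconds < 0 else ""
    return sign + str(timedelta(seconds=abs(seconds)))


def remaining_budget_percent(decimal_value: float, ndigits: int = 2) -> float:
    """Convert `remaining_budget` (percentage in decimal form, e.g. 0.25) to a percentage (25.0)."""
    return round(decimal_value * 100, ndigits)
```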

Burn rate (last x minutes)

```sql
select
    value as burn_rate,
    (
        select value / 60
        from nobl9_data
        where measurement = 'ratio_extrapolation_window'
          and slo_name = 'streaming-latency-slo'
          and objective_value = 9.5
          and project = 'default'
        order by timestamp desc
        limit 1
    ) as extrapolation_window_in_minutes
from nobl9_data
where measurement = 'burn_rate'
  and slo_name = 'streaming-latency-slo'
  and objective_value = 9.5
  and project = 'default'
order by timestamp desc
limit 1
```
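You can do the same lookup in application code on rows read from an export file. This Python sketch mirrors the query above: it takes the most recent value of each measurement and divides the extrapolation window by 60, as the query does. The helper names are hypothetical. The burn rate measurement name is a parameter because the query filters on `burn_rate` while the schema table lists `instantaneous_burn_rate`, so use whichever value appears in your files.

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, str]  # one CSV row as produced by csv.DictReader


def latest_value(rows: List[Row], measurement: str, slo_name: str,
                 objective_value: float, project: str = "default") -> Optional[float]:
    """Most recent value of `measurement` for one objective, or None if there is none."""
    matches = [
        r for r in rows
        if r["measurement"] == measurement
        and r["slo_name"] == slo_name
        and float(r["objective_value"]) == objective_value
        and r["project"] == project
    ]
    if not matches:
        return None
    # Assumes timestamps share one sortable format such as "YYYY-MM-DD HH:MM:SS".
    newest = max(matches, key=lambda r: r["timestamp"])
    return float(newest["value"])


def burn_rate_summary(rows: List[Row], slo_name: str, objective_value: float,
                      project: str = "default",
                      burn_rate_measurement: str = "burn_rate") -> Tuple[Optional[float], Optional[float]]:
    """Return (latest burn rate, extrapolation window in minutes), like the query above."""
    burn_rate = latest_value(rows, burn_rate_measurement, slo_name, objective_value, project)
    window_seconds = latest_value(rows, "ratio_extrapolation_window", slo_name, objective_value, project)
    window_minutes = window_seconds / 60 if window_seconds is not None else None
    return burn_rate, window_minutes
```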