SLO reviews Enterprise

Service reviews in Nobl9 provide comprehensive SLO oversight and deliver actionable insights for continuous SLO improvement. Reviews help organizations keep their SLOs relevant, ensuring effective monitoring of service performance.

SLO governance and reviews are mutually connected: through automated data integrity tracking and service health analysis, teams can conduct systematic reviews to identify any inconsistencies in their SLOs and implement proactive service management strategies.

Review scheduling is available only in the Nobl9 Enterprise Edition.

Reviews in a nutshell

- Set a review at the service level
  - Reviews are scheduled for services and applied to all SLOs they contain.
  - Moving SLOs to services with a review schedule applies the review settings to the moved SLOs.
  - Nobl9 tracks the review progress and provides details on review debt, frequency, and statuses.
- Configure repeated reviews
  - Set the required cycle frequency, which defines the review due dates.
  - You can configure a Custom cycle frequency using an rrule in the iCalendar format.
- Monitor the review debt
  - A dedicated widget on the SLO oversight dashboard highlights overdue and dusty SLOs across services.
- Stay aware of review details
  - Schedule reviews at least for essential services.
  - Assign users responsible for these services. They can act as a point of contact if any issues with a service appear.
  - Keep the review deadlines concise and tailored to your business objectives.
  - Ensure that SLOs with the To review status are reviewed before the review due date.

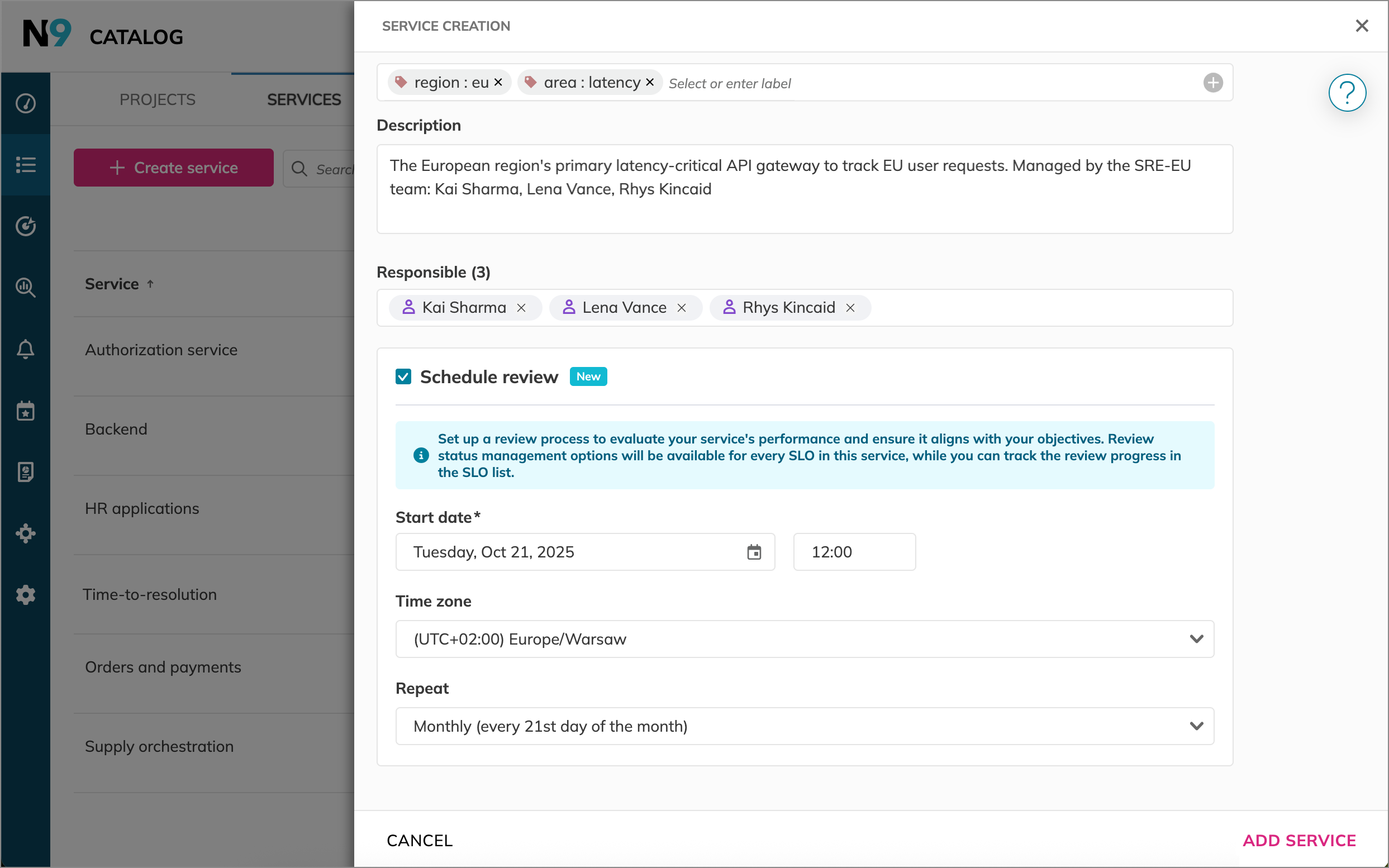

Scheduling a review

To schedule a review, open the service wizard. Select Schedule review and configure the schedule start, time zone, and repeat options.

The review deadline depends on the Repeat settings: the due date matches the start date of the next cycle. For example, if the schedule starts on October 17 and repeats weekly, the due dates will be as follows:

| 1st due date | 2nd due date | 3rd due date | 4th due date |

|---|---|---|---|

| October 17 | October 24 | October 31 | November 7 |
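The weekly example above can be reproduced with a few lines of Python. This is only a sketch of the date arithmetic, not of how Nobl9 computes due dates internally. The function name `weekly_due_dates` and the year are assumptions made for illustration, because the example names only the month and day.

```python
from datetime import date, timedelta


def weekly_due_dates(start: date, count: int) -> list[date]:
    """Return the first `count` due dates of a schedule that repeats weekly."""
    return [start + timedelta(weeks=i) for i in range(count)]


# The year 2025 is assumed; the example in the text gives only month and day.
for due in weekly_due_dates(date(2025, 10, 17), 4):
    print(f"{due:%B} {due.day}")
```

The loop prints October 17, October 24, October 31, and November 7, matching the table.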

To customize the frequency of repetition, enter the recurrence rule in the iCalendar format (RRULE).

Need help? Use the rrule tool to generate your rule.
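To give a sense of what a recurrence rule looks like, the sketch below composes rule values from a few common parts defined in the iCalendar specification (`FREQ`, `INTERVAL`, `BYDAY`). The helper `build_rrule` is hypothetical and is not part of Nobl9. Whether the Custom field expects the bare value or a value prefixed with `RRULE:` is not stated here, so check the field hint in the wizard.

```python
# Frequencies defined by the iCalendar specification (RFC 5545).
VALID_FREQ = {"SECONDLY", "MINUTELY", "HOURLY", "DAILY", "WEEKLY", "MONTHLY", "YEARLY"}


def build_rrule(freq: str, interval: int = 1, byday: tuple[str, ...] = ()) -> str:
    """Compose a recurrence rule value from a frequency, interval, and weekdays."""
    freq = freq.upper()
    if freq not in VALID_FREQ:
        raise ValueError(f"unsupported FREQ: {freq}")
    if interval < 1:
        raise ValueError("INTERVAL must be a positive integer")
    parts = [f"FREQ={freq}"]
    if interval > 1:
        parts.append(f"INTERVAL={interval}")
    if byday:
        parts.append("BYDAY=" + ",".join(byday))
    return ";".join(parts)


print(build_rrule("weekly", interval=2))       # FREQ=WEEKLY;INTERVAL=2
print(build_rrule("monthly", byday=("1MO",)))  # FREQ=MONTHLY;BYDAY=1MO
```

The first rule repeats every two weeks; the second repeats on the first Monday of each month.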

Once the review is scheduled, the To review status is applied to all SLOs in the service.

Reviewing SLOs

When reviewing an SLO, you may want to:

- Verify the SLO's budget-sensitive settings

- Check for data anomalies

Refer to the SLO guides where we explain budget-critical settings in detail.

Make the necessary fixes. Once done, set the SLO's status to Reviewed.

Tools for SLO reviews

| Tool | Description |

|---|---|

| SLI Analyzer | Pinpoint reasonable targets, assess incoming data, and tailor the time window and budgeting method for your SLO |

| Replay | Retrieve historical data for an SLO after you correct its configuration |

| Budget adjustments | Exclude events that might skew SLO performance data if these events aren't reflecting your service's actual reliability |

| Query checker | Validate that your SLOs for Datadog, Dynatrace, and New Relic are working correctly |

| Data source event logs | Inspect diagnostic logs from an SLO's data source. Available for data sources connected using the direct method |

| Agent metrics | Verify that an SLO's data source is collecting data correctly. Available for data sources connected using the agent method |

On each review status change, Nobl9 automatically generates an SLO annotation of the Review note category. These annotations fall into the User type, and you can edit them.

As a best practice, we recommend adding a note after every transition in review status.

SLO annotations support Markdown.

If a review is not needed for an individual SLO, you can skip it. The status of such an SLO becomes Skipped. It won't count toward the overdue SLOs number.

An SLO with the To review status that hasn't been reviewed before the due date becomes Overdue. Overdue SLOs affect SLO quality. Their status doesn't change with a new review cycle or after editing the review schedule. To address this, review such an SLO and transition it to Reviewed.

When no review is scheduled for the service, all its SLOs have the Not started review status. You can still review such SLOs individually and transition them to Reviewed.

Status transitions

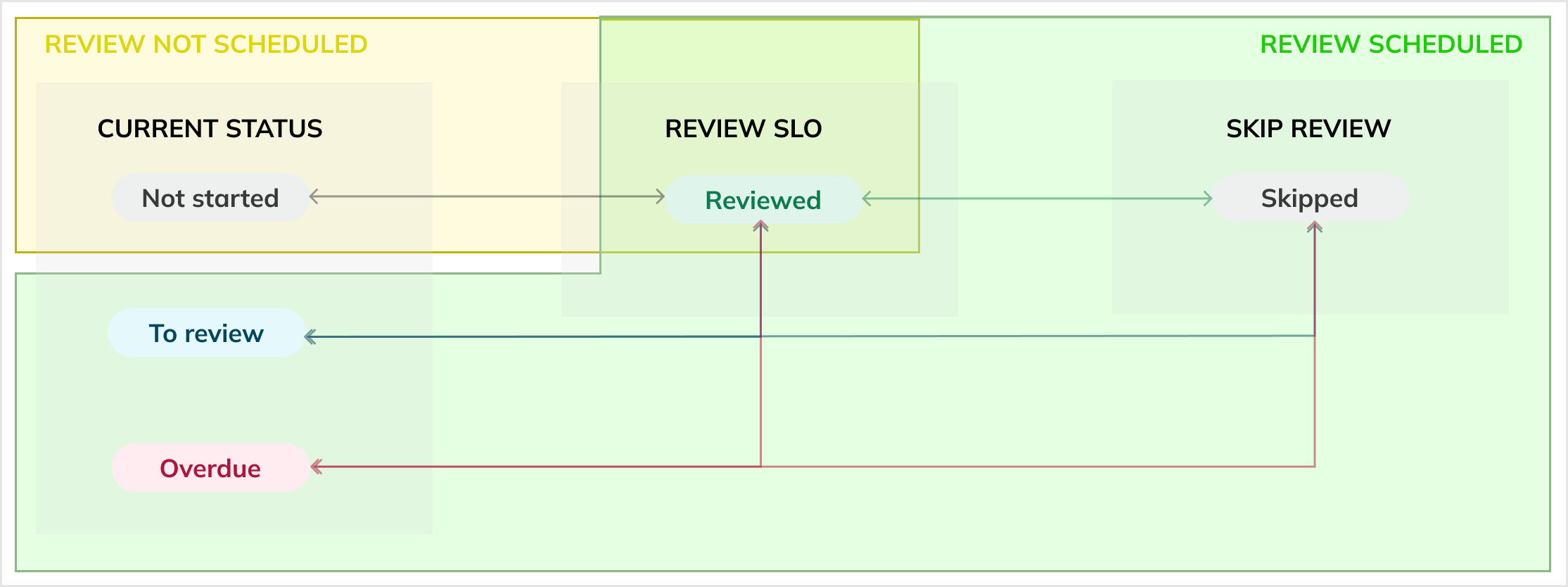

An SLO can have one of five review statuses: Not started, To review, Reviewed, Skipped, or Overdue.

The initial status is Not started, indicating that the SLO's review has not been scheduled for its corresponding service and that the SLO hasn't been reviewed.

Once a review is scheduled, on its first due date, all Not started SLOs automatically become To review.

Status transitions in a nutshell

An SLO's review status can change either manually or automatically, based on the following:

- The existence of a review schedule enables automatic transitions

- The current status of the SLO determines which transitions are possible

- Certain statuses, like Reviewed and Skipped, can only be set manually


Manual status transitions depend on two factors: whether a review is scheduled for the SLO's service, and the current status of the SLO.

The table below outlines the manual status transitions that are permitted, based on whether a review is scheduled. It is possible to change the status more than once within a single review cycle.

Table: Allowed manual status transitions

| Is review scheduled? | From | To |

|---|---|---|

| No | Not started / notStarted | Reviewed / reviewed |

| Yes | To review / toReview | Reviewed / reviewed |

| Yes | To review / toReview | Skipped / skipped |

| Yes | Skipped / skipped | Reviewed / reviewed |

| Yes | Overdue / overdue | Reviewed / reviewed |

| Yes | Overdue / overdue | Skipped / skipped |
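The table above can be expressed as a small lookup, which may help when validating a status change in your own tooling. This is a sketch transcribed from the table, not Nobl9's implementation. The names `ALLOWED_MANUAL` and `can_transition_manually` are hypothetical; the status identifiers are the ones shown in the table.

```python
# (is_review_scheduled, current_status) -> statuses a user may set manually.
# Transcribed from the table of allowed manual status transitions.
ALLOWED_MANUAL = {
    (False, "notStarted"): {"reviewed"},
    (True, "toReview"): {"reviewed", "skipped"},
    (True, "skipped"): {"reviewed"},
    (True, "overdue"): {"reviewed", "skipped"},
}


def can_transition_manually(scheduled: bool, current: str, target: str) -> bool:
    """Return True if the table lists `current` -> `target` for this schedule state."""
    return target in ALLOWED_MANUAL.get((scheduled, current), set())


print(can_transition_manually(True, "overdue", "skipped"))   # True
print(can_transition_manually(True, "skipped", "toReview"))  # False
```

The second call returns False because To review is only ever set automatically.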

When no review is scheduled for a service, its SLOs that haven't been reviewed have the Not started (notStarted) status.

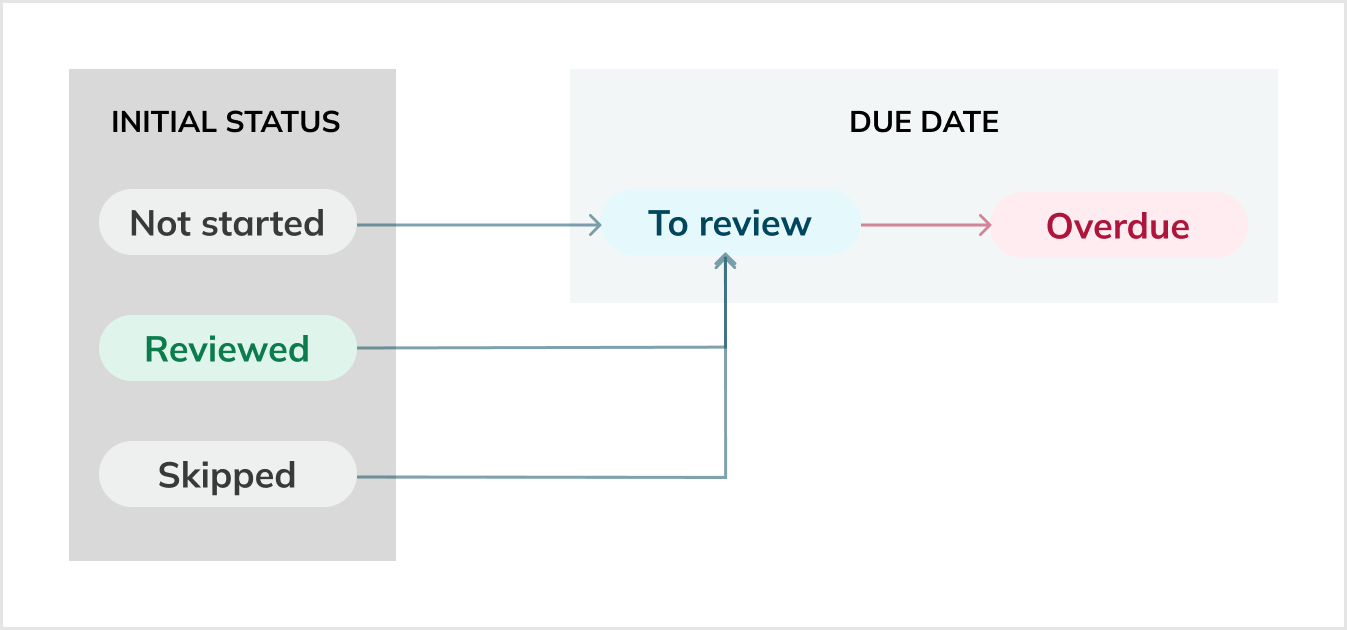

Once the review is scheduled, Nobl9 automatically updates the review status of SLOs according to the review schedule cadence:

- Not started, Skipped, Reviewed -> To review
  - On the schedule start date, all SLOs become To review (toReview). For new schedules, the due date is set to the first occurrence of the schedule pattern.
  - For subsequent cycles, all Reviewed (reviewed) and Skipped (skipped) SLOs from the completed cycle automatically become To review at the start of the new cycle (the next due date).
- To review -> Overdue
  - Any To review SLOs not reviewed by the due date become Overdue (overdue).
  - The Overdue status indicates a scheduled review was missed.
  - An Overdue SLO falls out of the automatic transition logic. It will not change status automatically in subsequent cycles until a user manually transitions it to Reviewed or Skipped.
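The automatic rules above reduce to a single function of the current status, applied at each due date. The sketch below is an illustration of those rules only. The function name `status_at_due_date` is hypothetical, and the status identifiers are the ones used on this page.

```python
def status_at_due_date(status: str) -> str:
    """Return the status after the automatic update that runs at a due date."""
    if status in {"notStarted", "skipped", "reviewed"}:
        # A new cycle starts: the SLO must be reviewed again.
        return "toReview"
    if status == "toReview":
        # The previous cycle ended without a review or a skip.
        return "overdue"
    # Overdue SLOs stay overdue until a user reviews or skips them.
    return status


print(status_at_due_date("reviewed"))  # toReview
print(status_at_due_date("toReview"))  # overdue
print(status_at_due_date("overdue"))   # overdue
```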

Status transition reference

Table: Example of a biweekly review cycle with the start date on November 18

| Starting status | Status on November 18 (auto) | During the cycle (manual) | Status on December 2 (auto) | During the cycle (manual) | Status on December 16 (auto) |

|---|---|---|---|---|---|

| Not started, Skipped, Reviewed | To review | Status updated to Skipped or Reviewed | To review | Neither reviewed nor skipped | Overdue |

| To review | Overdue | Neither reviewed nor skipped | Overdue | Status updated to Skipped or Reviewed | To review |
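Both rows of the biweekly example can be replayed with a short simulation that alternates automatic updates at each due date with optional manual changes during the cycle. This is a sketch under stated assumptions: the `AUTO` mapping and the `replay` helper are hypothetical names, and the year is assumed because the example gives only month and day.

```python
from datetime import date, timedelta

# Automatic update applied at every due date, per the rules on this page.
AUTO = {
    "notStarted": "toReview",
    "skipped": "toReview",
    "reviewed": "toReview",
    "toReview": "overdue",
    "overdue": "overdue",
}


def replay(start_status, manual_actions, first_due, cycles=3, every=timedelta(weeks=2)):
    """Return [(due_date, status_after_auto_update), ...] for `cycles` due dates.

    manual_actions[i] is the status a user sets during cycle i, or None.
    """
    status = start_status
    timeline = []
    for i in range(cycles):
        status = AUTO[status]
        timeline.append((first_due + i * every, status))
        if i < len(manual_actions) and manual_actions[i]:
            status = manual_actions[i]
    return timeline


start = date(2025, 11, 18)  # assumed year
for row in (("reviewed", ["reviewed", None]), ("toReview", [None, "skipped"])):
    for due, status in replay(*row, start):
        print(due.isoformat(), status)
```

The first row yields toReview, toReview, overdue on November 18, December 2, and December 16; the second yields overdue, overdue, toReview, as in the table.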

Table: Possible review status transitions

| Status before | Scenario | Status after | Transition type |

|---|---|---|---|

| Not started | Add a review schedule; start date is now or earlier | To review | Auto |

| Not started | Review an SLO without the schedule | Reviewed | Manual |

| To review, Skipped, Overdue | Review an SLO | Reviewed | Manual |

| To review, Overdue | Skip an SLO review | Skipped | Manual |

| To review | Move an SLO to a service without a review schedule | Not started | Auto |

| To review, Overdue | New review cycle starts; SLO hasn't been reviewed | Overdue | Auto |

| Skipped | New review cycle starts | To review | Auto |

| Reviewed | New review cycle starts | To review | Auto |

Table: Scenarios not resulting in status transition

| Status | Scenario |

|---|---|

| Not started | Set a review schedule with the start date in the future |

| All statuses | • Move an SLO to a service with a review due date in the future • Defer a review due date |

| Reviewed | • Discard a review schedule • Move an SLO to a service without a scheduled review |

| Overdue | • New review cycle starts • Modify a review schedule |

Recommendations for an SLO review

Based on industry-accepted SRE best practices, we recommend considering the following key areas when performing an SLO review:

- SLO performance and error budget analysis
  - Was the SLO met?
    This is the most fundamental check. Was the service reliable enough over the review period?
  - How much of the error budget was consumed?
    - High consumption
      If you're close to exhausting the budget, why? Was it due to a single major incident or an accumulation of many minor issues? Analyze the consumption trend to determine where to focus engineering efforts.
    - Low/No consumption
      If you're consistently consuming very little of your error budget, is the SLO too loose? An overly loose SLO fails to provide a useful signal and can obscure minor issues. It might be time to tighten the target.

  Hint: Check for Constant or No burn data anomalies detected for this SLO.

- SLO definition and relevance
  - Is this still the right SLO?
    Does it accurately reflect the user experience? For example, if you're measuring latency, is it still the most critical indicator of user satisfaction, or has another metric (like success rate) become more important?
  - Is the SLO target still appropriate?
    Is it still meaningful for the business and users? Has the service's criticality changed? A more critical service may require a stricter SLO, while a less critical one may not justify the effort needed to maintain a high target.
  - Does the measurement time window make sense?
    Is the current period still the right one for assessing performance?

  Hint: Use the SLI Analyzer to reevaluate the target, values, and the time window.

- Alerts and incident response
  - Did the SLO alerts fire when expected?
    If the error budget was significantly impacted, did you receive timely alerts? If not, consider tuning the alert policies applied to the SLO.
  - Were there any near-misses?
    Were there periods of high error budget burn that didn't trigger an alert but should have?
  - What was the outcome of incidents related to this SLO?
    Review the post-mortems for any incidents that consumed error budget. The SLO review is the perfect time to ensure that action items from these post-mortems are being implemented.

  Hint:
  - Revisit your alerting conditions to ensure they trigger appropriately.
  - Check if any data anomalies have been detected on your SLO.

- User and business context
  - Is there a correlation between error budget burn and user-facing issues?
    Check if periods of high error budget burn correlate with an increase in customer support tickets, negative feedback, or other business metrics. If there's no correlation, your SLO might be reflecting the wrong thing.
  - What is the business impact of the current level of reliability?
    Is the business happy with the service's performance? The answer to this question is the ultimate test of your SLO's efficacy.

  Hint: Dive into SLO configuration details with our SLO guides section.
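For the error budget questions in the first review area, a rough consumption figure can be computed from event counts. The sketch below assumes an SLO measured as a ratio of good to total events, which is only one way to budget. The function name and the numbers are hypothetical, and the result may differ from what Nobl9 reports for your SLO's budgeting method and time window.

```python
def budget_consumed(total_events: int, bad_events: int, target: float) -> float:
    """Return the fraction of the error budget used for a good/total ratio SLO."""
    allowed_bad = total_events * (1 - target)
    if allowed_bad <= 0:
        raise ValueError("the target leaves no error budget")
    return bad_events / allowed_bad


# Hypothetical numbers: 1,000,000 requests, 250 failures, 99.9% target.
print(f"{budget_consumed(1_000_000, 250, 0.999):.0%}")  # 25%
```

A value near or above 100% points to the high-consumption questions; a value that stays close to 0% across review periods suggests the target may be too loose.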

An SLO review is a powerful, proactive process. It helps ensure that your SLOs are not just numbers, but are actively driving engineering decisions, prioritizing work, and ensuring your service meets user expectations.

Useful links

- Services (Nobl9 resources)
- Reliability and error budget calculations (SLO guides)
- SLO calculations (SLO guides)
- Calendar-aligned SLOs (SLO guides)
- Rolling SLOs (SLO guides)
- SLO management (SLO guides)
- Budgeting methods (Tags)
- rrule (iCalendar specification)
- SLO oversight fundamentals (SLO oversight)
- Data anomalies (SLO oversight)
- Service Health dashboard (Dashboards)
- SLO oversight dashboard (Dashboards)
- Increasing SLO sensitivity to incidents (SLO guides)
- Project-level roles (Access management)